Function pointers are among the most powerful tools in C, but are a bit of a pain during the initial stages of learning. This article demonstrates the basics of function pointers, and how to use them to implement function callbacks in C. C++ takes a slightly different route for callbacks, which is another journey altogether.

A pointer is a special kind of variable that holds the address of another variable. The same concept applies to function pointers, except that instead of pointing to variables, they point to functions. If you declare an array, say, int a[10]; then the array name a will in most contexts (in an expression or passed as a function parameter) “decay” to a non-modifiable pointer to its first element (even though pointers and arrays are not equivalent while declaring/defining them, or when used as operands of the sizeof operator). In the same way, for int func();, func decays to a non-modifiable pointer to a function. You can think of func as a const pointer for the time being.

But can we declare a non-constant pointer to a function? Yes, we can, just like we declare a non-constant pointer to a variable:

    int (*ptrFunc)();

ptrFunc is a pointer to a function that takes no arguments and returns an integer. (Strictly speaking, before C23 an empty parameter list leaves the arguments unspecified; write (void) to state that there are none.) DO NOT forget to put in the parentheses around *ptrFunc, otherwise the compiler will assume that ptrFunc is a normal function name, which takes nothing and returns a pointer to an integer.

Let’s try some code. Check out the following simple program:

    #include <stdio.h>

    /* function prototype */
    int func(int, int);

    int main(void)
    {
        int result;

        /* calling a function named func */
        result = func(10, 20);
        printf("result = %d\n", result);
        return 0;
    }

    /* func definition goes here */
    int func(int x, int y)
    {
        return x + y;
    }

If you compile it with gcc -g -o example1 example1.c and invoke it with ./example1, the output is as follows:

    result = 30

The above program calls func() the simple way. Let’s modify the program to call it using a pointer to a function. Here’s the changed program:

    #include <stdio.h>

    int func(int, int);

    int main(void)
    {
        int result1, result2;

        /* declaring a pointer to a function which takes
           two int arguments and returns an integer as result */
        int (*ptrFunc)(int, int);

        /* assigning ptrFunc to func's address */
        ptrFunc = func;

        /* calling func() through explicit dereference */
        result1 = (*ptrFunc)(10, 20);

        /* calling func() through implicit dereference */
        result2 = ptrFunc(10, 20);

        printf("result1 = %d result2 = %d\n", result1, result2);
        return 0;
    }

    int func(int x, int y)
    {
        return x + y;
    }

The output is as follows:

    result1 = 30 result2 = 30
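To make the decay and parenthesis rules above concrete, here is a minimal sketch. The names add, returns_int_pointer and call_every_way are hypothetical and exist only for this illustration; they are not part of the programs above. It shows that a function name, its address and a dereferenced pointer all reach the same function, and that a declaration without the parentheses is a function returning int *.

```c
#include <assert.h>

/* Hypothetical helper, used only for this illustration. */
int add(int x, int y)
{
    return x + y;
}

/* Without parentheses around *name, this declares a FUNCTION
   that returns a pointer to int, not a pointer to a function. */
int *returns_int_pointer(void)
{
    static int value = 42;
    return &value;
}

/* Calls add() through four equivalent spellings and sums the results. */
int call_every_way(int x, int y)
{
    int (*p)(int, int) = add;   /* the name decays to a pointer */

    assert(p == &add);          /* taking the address gives the same pointer */

    return add(x, y)            /* direct call */
         + p(x, y)              /* implicit dereference */
         + (*p)(x, y)           /* explicit dereference */
         + (**p)(x, y);         /* *p decays straight back to a pointer */
}
```

Calling call_every_way(10, 20) from main returns 120, that is, four times the 30 printed by the programs above.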

A simple callback function

At this stage, we have enough knowledge to deal with function callbacks. According to Wikipedia, “In computer programming, a callback is a reference to executable code, or a piece of executable code, that is passed as an argument to other code. This allows a lower-level software layer to call a subroutine (or function) defined in a higher-level layer.”

Let’s try one simple program to demonstrate this. The complete program has three files: callback.c, reg_callback.h and reg_callback.c.

    /* callback.c */
    #include <stdio.h>
    #include "reg_callback.h"

    /* callback function definition goes here */
    void my_callback(void)
    {
        printf("inside my_callback\n");
    }

    int main(void)
    {
        /* initialize function pointer to my_callback */
        callback ptr_my_callback = my_callback;

        printf("This is a program demonstrating function callback\n");

        /* register our callback function */
        register_callback(ptr_my_callback);

        printf("back inside main program\n");
        return 0;
    }

    /* reg_callback.h */
    typedef void (*callback)(void);
    void register_callback(callback ptr_reg_callback);

    /* reg_callback.c */
    #include <stdio.h>
    #include "reg_callback.h"

    /* registration goes here */
    void register_callback(callback ptr_reg_callback)
    {
        printf("inside register_callback\n");

        /* calling our callback function my_callback */
        (*ptr_reg_callback)();
    }

Compile the program with gcc -Wall -o callback callback.c reg_callback.c and run it with ./callback:

    This is a program demonstrating function callback
    inside register_callback
    inside my_callback
    back inside main program

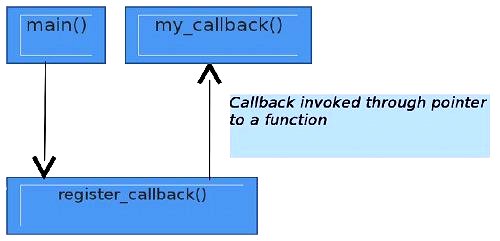

register_callback) where the callback function needs to be called.We could have written the above code in a single file, but have put the definition of the callback function in a separate file to simulate real-life cases, where the callback function is in the top layer and the function that will invoke it is in a different file layer. So the program flow is like what can be seen in Figure 1.

Figure 1: Program flow

This is exactly what the Wikipedia definition states.
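In the program above, register_callback invokes the callback immediately. Registration functions often just store the pointer and call it later, when some event occurs. The following single-file sketch illustrates that variation; store_callback, fire_event, count_call and call_count are hypothetical names, not part of the article's three files.

```c
#include <assert.h>
#include <stddef.h>

/* Same shape as the typedef in reg_callback.h. */
typedef void (*callback)(void);

/* Hypothetical lower-layer state: the callback registered so far. */
static callback registered = NULL;

/* Hypothetical higher-layer state: how often the callback has run. */
static int calls = 0;

/* Lower layer: remember the callback instead of calling it right away. */
void store_callback(callback cb)
{
    assert(cb != NULL);   /* this sketch treats a NULL callback as a bug */
    registered = cb;
}

/* Lower layer: an event occurred, so call back into the higher layer.
   Returns 1 if a callback was invoked, 0 if none was registered. */
int fire_event(void)
{
    if (registered == NULL)
        return 0;
    registered();
    return 1;
}

/* Higher layer: the callback itself. */
void count_call(void)
{
    calls++;
}

/* Higher layer: lets the caller observe that the callback ran. */
int call_count(void)
{
    return calls;
}
```

Before store_callback(count_call) is called, fire_event() returns 0 and does nothing; afterwards each fire_event() runs count_call once.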

Use of callback functions

One use of callback mechanisms can be seen here:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

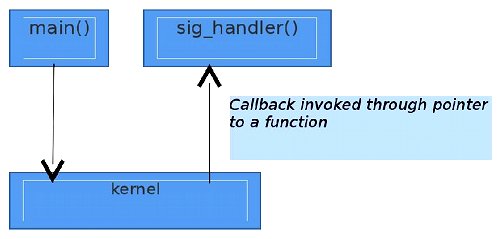

| / * This code catches the alarm signal generated from the kernel Asynchronously */#include <stdio.h>#include <signal.h>#include <unistd.h>struct sigaction act;/* signal handler definition goes here */void sig_handler(int signo, siginfo_t *si, void *ucontext){ printf("Got alarm signal %d\n",signo); /* do the required stuff here */}int main(void){ act.sa_sigaction = sig_handler; act.sa_flags = SA_SIGINFO; /* register signal handler */ sigaction(SIGALRM, &act, NULL); /* set the alarm for 10 sec */ alarm(10); /* wait for any signal from kernel */ pause(); /* after signal handler execution */ printf("back to main\n"); return 0;} |

Figure 2: Kernel callback
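Because a signal handler can interrupt the program at any point, a common pattern is to let the handler only record the signal in a volatile sig_atomic_t flag and leave the printing to the main flow. The sketch below assumes a POSIX system; on_alarm and wait_for_alarm are hypothetical names chosen for this illustration.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <signal.h>
#include <string.h>
#include <unistd.h>

/* Written by the handler, read by the main flow. */
static volatile sig_atomic_t last_signal = 0;

/* The callback the kernel invokes: it only records the signal number. */
static void on_alarm(int signo)
{
    last_signal = signo;
}

/* Registers on_alarm for SIGALRM, arms an alarm and waits for it.
   Returns the signal number seen by the handler, or -1 on error. */
int wait_for_alarm(unsigned int seconds)
{
    struct sigaction act;
    sigset_t block_alarm, previous, wait_mask;

    assert(seconds > 0);   /* alarm(0) would cancel the alarm instead */

    memset(&act, 0, sizeof act);
    act.sa_handler = on_alarm;
    sigemptyset(&act.sa_mask);
    if (sigaction(SIGALRM, &act, NULL) != 0)
        return -1;

    /* Block SIGALRM so it cannot arrive between the test and the wait. */
    sigemptyset(&block_alarm);
    sigaddset(&block_alarm, SIGALRM);
    if (sigprocmask(SIG_BLOCK, &block_alarm, &previous) != 0)
        return -1;

    /* While waiting, use the old mask with SIGALRM explicitly allowed. */
    wait_mask = previous;
    sigdelset(&wait_mask, SIGALRM);

    last_signal = 0;
    alarm(seconds);
    while (last_signal == 0)
        sigsuspend(&wait_mask);   /* atomically swap masks and wait */

    sigprocmask(SIG_SETMASK, &previous, NULL);
    return (int)last_signal;
}
```

A main function could call wait_for_alarm(10) and then print its message with printf, since it is back in the normal program flow by then.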

The next example (insertion_main.c, insertion_sort.c and insertion_sort.h) shows this mechanism used to implement a trivial insertion sort library. The flexibility lets users plug in any comparison function they want.

    /* insertion_sort.h */
    typedef int (*callback)(int, int);
    void insertion_sort(int *array, int n, callback comparison);

    /* insertion_main.c */
    #include <stdio.h>
    #include <stdlib.h>
    #include "insertion_sort.h"

    /* each comparison returns non-zero when a must be placed after b */
    int ascending(int a, int b)
    {
        return a > b;
    }

    int descending(int a, int b)
    {
        return a < b;
    }

    /* a possible body: odd values are placed after even ones */
    int even_first(int a, int b)
    {
        return (a % 2 != 0) && (b % 2 == 0);
    }

    /* a possible body: even values are placed after odd ones */
    int odd_first(int a, int b)
    {
        return (a % 2 == 0) && (b % 2 != 0);
    }

    int main(void)
    {
        int i;
        int choice;
        int array[10] = {22, 66, 55, 11, 99, 33, 44, 77, 88, 0};

        printf("ascending 1: descending 2: even_first 3: odd_first 4: quit 5\n");
        printf("enter your choice = ");
        if (scanf("%d", &choice) != 1)
            choice = 0;   /* unreadable input is handled as an invalid option */

        switch (choice) {
        case 1:
            insertion_sort(array, 10, ascending);
            break;
        case 2:
            insertion_sort(array, 10, descending);
            break;
        case 3:
            insertion_sort(array, 10, even_first);
            break;
        case 4:
            insertion_sort(array, 10, odd_first);
            break;
        case 5:
            exit(0);
        default:
            printf("no such option\n");
        }

        printf("after insertion_sort\n");
        for (i = 0; i < 10; i++)
            printf("%d\t", array[i]);
        printf("\n");
        return 0;
    }

    /* insertion_sort.c */
    #include "insertion_sort.h"

    void insertion_sort(int *array, int n, callback comparison)
    {
        int i, j, key;

        for (j = 1; j <= n - 1; j++) {
            key = array[j];
            i = j - 1;
            while (i >= 0 && comparison(array[i], key)) {
                array[i + 1] = array[i];
                i = i - 1;
            }
            array[i + 1] = key;
        }
    }
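The C standard library uses the same mechanism: qsort from <stdlib.h> takes a pointer to a comparison function. The sketch below is not part of the insertion sort library above; compare_ascending, compare_descending and sort_ints are hypothetical names. Note that qsort expects its callback to return a negative, zero or positive value, unlike the true/false convention of the comparison functions above.

```c
#include <assert.h>
#include <stdlib.h>

/* qsort callback: negative if *lhs < *rhs, zero if equal, positive otherwise. */
int compare_ascending(const void *lhs, const void *rhs)
{
    int a = *(const int *)lhs;
    int b = *(const int *)rhs;

    return (a > b) - (a < b);   /* avoids the overflow risk of a - b */
}

/* Reverses the order by swapping the arguments. */
int compare_descending(const void *lhs, const void *rhs)
{
    return compare_ascending(rhs, lhs);
}

/* Thin wrapper: sorts n ints using whichever comparison callback is passed. */
void sort_ints(int *array, size_t n,
               int (*compare)(const void *, const void *))
{
    assert(array != NULL && compare != NULL);
    qsort(array, n, sizeof array[0], compare);
}
```

As with insertion_sort, the caller chooses the ordering simply by passing a different function, for example sort_ints(array, 10, compare_descending).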