Covers embedded-system topics: microcontrollers and their peripherals; basic Linux and Windows commands; UART, I2C, SPI, CAN, and other protocols; GSM, GPRS, and 3G mobile communication systems and their basic differences; and operating system fundamentals.

Monday, December 31, 2012

Saturday, December 8, 2012

Wednesday, October 17, 2012

Embedded Data Communication Protocols

Developers have a range of wired and wireless mechanisms to connect microcontrollers to their peers (Table 1). On-chip peripherals often dictate the options, but many of the interfaces are accessible via off-chip peripherals. External line drivers and support chips are frequently required as well.

There’s a maximum speed/distance tradeoff with some interfaces such as I2C. There also are many proprietary interfaces like 1-Wire from Maxim Integrated Products. Likewise, many high-performance DSPs and microcontrollers have proprietary high-speed interfaces.

Some Analog Devices DSPs have high-speed serial links designed for connecting multiple DSP chips (see “Dual Core DSP Tackles Video Chores”). XMOS has proprietary serial links that allow processor chips to be combined in a mesh network (see “Multicore And Soft Peripherals Target Multimedia Applications”).

PCI Express is used for implementing redundant interfaces often found in storage applications using the PCI Express non-transparent (NT) bridging support. High-speed interfaces like Serial RapidIO and InfiniBand are built into higher-end microprocessors, but they tend to be out of reach for most microcontrollers since they push the upper end of the bandwidth spectrum. Microcontroller speeds are moving up, but only high-end versions touch the gigahertz range where microprocessors are king.

Ethernet is in the mix because of its compatibility from the low end at 10 Mbits/s. Also, some micros have 10- or 10/100-Mbit/s interfaces as options. In fact, this end of the Ethernet spectrum is the basis for many automation control networks where small micro nodes provide sensor and control support (see “Consider Fast Ethernet For Your Industrial Applications”). Gigabit Ethernet is ubiquitous for PCs, hubs, and switches with 10G Ethernet.

This article primarily looks at hardware and low-level protocols. Many applications can be built utilizing this level of support. Higher-level protocols like CANopen, DeviceNet, and EtherNet/IP target industrial control applications.

Serial interfaces such as RS-232 and RS-422/485 define just the electrical and signalling characteristics. This is useful because the serial ports on most microcontrollers can be configured to handle a range of low-level protocols. The next level up allows asynchronous and synchronous protocols like High-Level Data Link Control (HDLC) to ride atop the hardware. Higher-level protocols like Modbus employ these standards.

SPI is a serial interface that is primarily used in a master/slave configuration with the microcontroller controlling external peripheral devices. Designed to make devices as simple as possible, it’s essentially a shift register. These days the slave might be a micro. SPI can be used in a multimaster mode, but it requires extra logic. Multimaster SPI also tends to be non-standard and is used in very few applications, normally ones that need to share an SPI device between two hosts.
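The shift-register view of SPI can be sketched in a few lines. This is a minimal simulation, not a driver: it assumes 8-bit registers shifted MSB first, and the function name `spi_transfer` is made up for illustration.

```python
from typing import Tuple


def spi_transfer(master_reg: int, slave_reg: int, bits: int = 8) -> Tuple[int, int]:
    """Simulate one SPI transfer between two shift registers (MSB first).

    Returns the (master, slave) register contents after `bits` clock cycles.
    """
    mask = (1 << bits) - 1
    for _ in range(bits):
        mosi = (master_reg >> (bits - 1)) & 1  # bit leaving the master
        miso = (slave_reg >> (bits - 1)) & 1   # bit leaving the slave
        # Each side shifts left by one and takes in the other side's bit.
        master_reg = ((master_reg << 1) | miso) & mask
        slave_reg = ((slave_reg << 1) | mosi) & mask
    return master_reg, slave_reg


# After eight clocks the two registers have simply swapped contents.
print([hex(v) for v in spi_transfer(0xA5, 0x3C)])
```

Every transfer is therefore a simultaneous write and read, which is why a master that only wants to read still has to clock out dummy bits.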

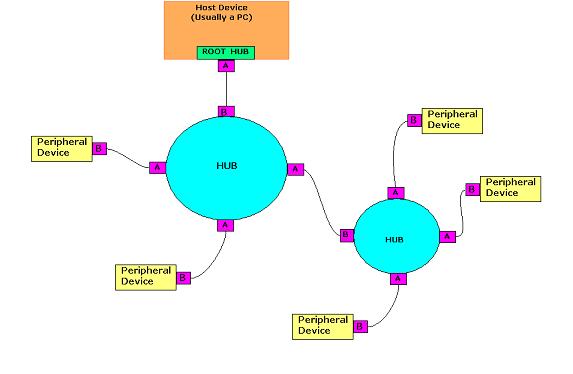

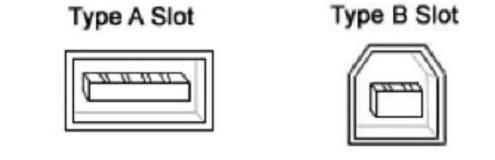

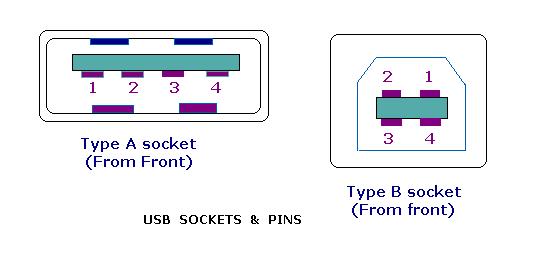

USB is in the same boat as serial ports and SPI. It’s ubiquitous for peripheral devices from mice to printers. It may look like a network, but it is host-driven. USB On-The-Go (OTG) allows a device to become a host, enabling a camera to control a printer or be attached to a PC as a storage device. USB 3.0 even operates in full duplex, but the host is still in charge.

1. An I2C network uses two control lines and is typically implemented using open drain drivers. There is a range of I2C protocols based around 7-bit and 10-bit device addressing.

I2C normally uses an open-drain interface requiring at least one pull-up resistor per wire. Longer runs often place resistors at either end of the wire, allowing a dominant (0) and recessive (1) state in controller area networking (CAN) parlance. More than one device can invoke the dominant state without harming other devices. In other point-to-point interfaces, simultaneous invocations would tend to fry the drivers. The logic levels are arbitrary but often related to voltages, so 0 (logical), 0 V, and ground seem to work together nicely.

I have shown the logical connection to the two wires with separate connections for the transmit and receive buffers. In general, the transmit and receive lines are tied together within the microcontroller, which exposes a single pin to the outside world for each wire because the transmit buffer drives the bus directly. This is different from CAN, which normally uses external buffers. Note that CAN uses two wires but in a balanced mode for a single signal versus the two signals for I2C.

The pull-up resistors hold the I2C bus in the recessive state (1). The transmitters with their open drain generate the dominant state (0). Any number of transmitters can be on at one time, but the total current through the pull-up resistor will be the same. It is simply split between all active devices.
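The open-drain behavior amounts to a wired-AND, which the following sketch models. It assumes ideal drivers that share the pull-up current equally; the function names and the 3.3-V, 3.3-kΩ values are illustrative only.

```python
from typing import Sequence


def bus_level(pulling_low: Sequence[bool]) -> int:
    """Logic level of one open-drain wire.

    Each entry tells whether that device's transistor is on (pulling low).
    The pull-up wins only when nobody pulls low.
    """
    return 0 if any(pulling_low) else 1


def current_per_driver_ma(vdd: float, pullup_ohms: float, active: int) -> float:
    """Pull-up current (mA) seen by each active driver, assuming an equal split."""
    if active < 1:
        raise ValueError("at least one driver must be active")
    total_ma = vdd / pullup_ohms * 1000.0  # set by the resistor, not the driver count
    return total_ma / active


print(bus_level([False, False]))  # recessive: nobody pulls low
print(bus_level([True, True]))    # dominant: two devices pull low, no harm done
print(current_per_driver_ma(3.3, 3300.0, 2))
```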

The basic I2C protocol is based around a variable-length packet of 8-bit bytes. The packet’s special start and stop sequence is easy to recognize. The clock is then used to mark the data being sent.

The packet starts with a header that’s 1 or 2 bytes depending upon the type of addressing being used. A single byte provides 7-bit addressing supporting 128 addresses. Of these, 16 are reserved, allowing for 112 devices.
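The address arithmetic can be checked directly. The sketch below assumes the 16 reserved addresses are the two groups 0000xxx and 1111xxx, as in the published I2C specification; `is_reserved` is a hypothetical helper name.

```python
def is_reserved(addr7: int) -> bool:
    """True when a 7-bit address falls in a reserved group (0000xxx or 1111xxx)."""
    return (addr7 >> 3) in (0b0000, 0b1111)


usable = [a for a in range(128) if not is_reserved(a)]
reserved = [a for a in range(128) if is_reserved(a)]

print(len(reserved), len(usable))          # 16 reserved, 112 usable
print(hex(usable[0]), hex(usable[-1]))     # usable range runs from 0x08 to 0x77
```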

Four of the reserved addresses are used for 10-bit addressing. The first byte contains two bits of the address, and the second byte contains the rest of the address. Most devices support and recognize both addressing modes. If not, they utilize one or the other.
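Here is one way to build the two-byte header for a 10-bit address. The sketch assumes the 11110xx prefix from the I2C specification for the first byte and sets the direction bit to write; the function name is made up for illustration.

```python
def ten_bit_header(addr10: int) -> bytes:
    """Return the two header bytes that address a 10-bit device for a write.

    First byte:  1 1 1 1 0 A9 A8 R/W   (R/W = 0 here)
    Second byte: A7 ... A0
    A read in 10-bit mode needs a repeated start after this header,
    which this sketch does not model.
    """
    if not 0 <= addr10 < 1024:
        raise ValueError("10-bit address must be in 0..1023")
    first = 0b11110000 | ((addr10 >> 8) << 1)  # top two address bits
    second = addr10 & 0xFF                     # remaining eight bits
    return bytes([first, second])


header = ten_bit_header(0x2A5)
print(header.hex())
```

Shifting the first byte right by one bit gives a value from 0x78 to 0x7B, which is how the four reserved 7-bit addresses flag a 10-bit transfer.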

An acknowledge bit follows the eight bits of each byte, and the receiver can use it to signal a negative acknowledgement (NAK). Most devices do not. Likewise, devices can extend or stretch the clock by holding the clock line in the dominant state. This is normally done where timing is an issue and a device needs some additional time to generate or process data. NAKs and clock stretching aren’t used often, and the host must support them.

The last bit (R) of the first byte of the packet specifies the direction of the data transfer. If the value is 1, the selected device sends the subsequent data. The host controls the clock, and the device needs to keep up unless clock stretching is used. The data has no error checking associated with it, although error checking could be done at a higher level. The host controls the amount of data.

A device can utilize more than one address and typically does. Different addresses are used for controlling different registers on a device such as an address register. This is often the case for I2C serial memories where an address counter register is loaded first. Subsequent reads or writes increment the address register with each byte being sent or received.

I2C has a number of close relations including System Management Bus (SMBus) and Power Management Bus (PMBus), an SMBus variant. The Intelligent Platform Management Interface uses SMBus (see “Fundamentals Of The Intelligent Platform Management Interface (IPMI)”). Multimaster operation comes into play in applications like IPMI and SMBus.

There are two ways to approach the problem of several masters contending for the bus. The first is to use a token passing scheme to avoid conflicts. The other is to use collision detection, which is the most common and standardized approach. In collision detection, the master tracks what it transmits and what is on the bus. If they differ, then there’s a collision and the master needs to stop transmitting.

There is no effect on the other master driving the bus as long as the master detecting the collision stops immediately. This is true even if multiple masters are transmitting. For example, in a 10-bit address mode, the masters may not detect the problem for half a dozen bits into the first byte assuming the clocks are close or in sync.
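Collision detection on a wired-AND bus can be simulated bit by bit. The sketch below assumes perfectly synchronized clocks and models only the data line; the master names and bit patterns are invented for the example.

```python
from typing import Dict, List, Set, Tuple


def arbitrate(frames: Dict[str, List[int]]) -> Tuple[Set[str], List[int]]:
    """Run bitwise arbitration and return (surviving masters, bits seen on the bus)."""
    active = set(frames)
    length = min(len(bits) for bits in frames.values())
    bus_bits = []
    for i in range(length):
        # Wired-AND: the bus is 0 if any active master drives 0.
        bus = min(frames[name][i] for name in active)
        bus_bits.append(bus)
        # A master that sent 1 but reads back 0 has collided and stops.
        active = {name for name in active if frames[name][i] == bus}
    return active, bus_bits


# Two masters start 10-bit-style headers that share the 11110 prefix.
frames = {
    "A": [1, 1, 1, 1, 0, 0, 0, 0],
    "B": [1, 1, 1, 1, 0, 1, 0, 0],
}
winners, seen = arbitrate(frames)
print(winners, seen)
```

Master B drops out at the sixth bit, and the bits on the bus match what master A sent, so A's transfer continues undisturbed.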

Slave devices don’t have to worry about multimaster operation because they will always operate in the same fashion. It isn’t possible to initiate a read and write to a device at the same time. One direction always has priority and the slave device responds accordingly.

All masters must support multimaster operation. A non-multimaster master on an I2C network will eventually stomp all over the transmission of one of its peers. Also, there is no priority or balancing mechanism, so an I2C network won’t be a good choice if lots of collisions are anticipated.

Like SPI, I2C was designed to require minimal hardware, although more than SPI. These days the amount of hardware is less of an issue as software and higher-level protocols become more important. Also, like SPI, I2C is easily implemented in software.

I2C hardware is available to handle features like address recognition and multimaster support. Address recognition sometimes can be used to wake a device from a deep sleep. Multimaster support often uses DMA support and automatic retransmission.

A German consortium started Profibus in 1987. It supports multidrop RS-485, fiber optics, and Manchester Bus Power (MBP) connections. Profinet is a high-level protocol suitable for TCP/IP and Ethernet. DF1 is another controller networking protocol, though it is now outdated.

Robert Bosch GmbH developed CAN in 1983. It uses a single signal but is typically implemented using a balanced wire pair (Fig. 2). CAN initially targeted automotive applications where separate, robust drivers were a requirement. A host then provides separate transmit and receive signals to external drivers that are connected to the CAN bus.

2. CAN devices are normally connected to a CAN network via transceivers that provide isolation as well as drive capabilities because CAN networks are typically utilized in electrically noisy environments such as automotive applications.

Wired CAN is the most common network implementation, but optical versions are available as well. Most CAN drivers provide varying levels of bus isolation. Some provide optical isolation. The microcontroller and half the driver chip then can share a power supply while the bus uses another. This is very handy in electrically noisy environments such as automotive applications.

The CAN standard now defines two data frames in addition to other control and status frames with a similar format (Table 2). The two different data frames allow for an 11-bit and 29-bit address. Both are limited to 8 bytes of data. That may not seem like much, but CAN is designed for control applications where many messages with a small amount of data will be common. Operations such as reprogramming the flash memory of a device are possible, but they take a lot of packets.

CAN also turns the conventional addressing scheme on its head. I2C and conventional networks like Ethernet, Serial RapidIO, and InfiniBand employ a destination address. I2C doesn’t have a source address since it’s usually a single master platform, but the other networks typically include a source address as well.

With CAN, the address fields are used to describe the contents of the packet, not the source or destination. In fact, a packet may be sent without any device on the network doing anything with it.

Typically, a CAN packet is generated in response to an external event such as a switch closing or a sensor changing. Each event has its own address, so more than one device can send the same type of message. It also means that a packet is broadcast and any number of devices might utilize the information. A single event like a switch closure could initiate actions on a number of devices such as turning on a light or unlocking a door.
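The content-addressed, broadcast nature of CAN resembles a publish/subscribe model. The sketch below is a software analogy only; the `CanBus` class, the identifier `DOOR_SWITCH_CLOSED`, and its value are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class CanBus:
    """Toy bus: every frame is broadcast, and nodes pick frames by identifier."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[int, List[Callable[[bytes], None]]] = defaultdict(list)

    def subscribe(self, can_id: int, handler: Callable[[bytes], None]) -> None:
        self._listeners[can_id].append(handler)

    def send(self, can_id: int, data: bytes = b"") -> int:
        """Broadcast a frame; return how many nodes acted on it (possibly zero)."""
        handlers = self._listeners.get(can_id, [])
        for handler in handlers:
            handler(data)
        return len(handlers)


DOOR_SWITCH_CLOSED = 0x120  # hypothetical identifier describing the event

log: List[str] = []
bus = CanBus()
bus.subscribe(DOOR_SWITCH_CLOSED, lambda data: log.append("light on"))
bus.subscribe(DOOR_SWITCH_CLOSED, lambda data: log.append("door unlocked"))

handled = bus.send(DOOR_SWITCH_CLOSED)  # any node may send this event
ignored = bus.send(0x555)               # nobody cares about this identifier
print(log, handled, ignored)
```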

An application normally allocates addresses in blocks so they can essentially include data when simple events are being specified. The packet data is used when more information is required. For example, the address may be used to indicate an error, and the data provides the type of error.

CAN includes error checking for each packet via a 15-bit cyclic redundancy check (CRC). The CRC is on the entire packet, not just the data field, which might not even exist. There’s also support for acknowledgement and retransmission among other features. Most of these features are built into CAN hardware, though it’s possible to implement CAN in bit-banging software.
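A bit-serial version of the 15-bit CRC looks like the following. It assumes the generator polynomial 0x4599 from the Bosch CAN 2.0 specification and operates on an already de-stuffed bit list; the helper names and sample bits are illustrative.

```python
from typing import List

CAN_CRC15_POLY = 0x4599  # x^15 + x^14 + x^10 + x^8 + x^7 + x^4 + x^3 + 1


def crc15(bits: List[int]) -> int:
    """Compute the CAN CRC-15 over a sequence of bits (register starts at zero)."""
    crc = 0
    for bit in bits:
        feedback = bit ^ ((crc >> 14) & 1)  # incoming bit XOR top register bit
        crc = (crc << 1) & 0x7FFF
        if feedback:
            crc ^= CAN_CRC15_POLY
    return crc


def to_bits(value: int, width: int) -> List[int]:
    """Most-significant-bit-first list of `width` bits."""
    return [(value >> i) & 1 for i in range(width - 1, -1, -1)]


message = to_bits(0x123, 11) + to_bits(0xBEEF, 16)  # arbitrary sample bits
checksum = crc15(message)

# A receiver that runs the CRC over message plus checksum ends up with zero.
print(crc15(message + to_bits(checksum, 15)))
```

Because the register starts at zero and no final inversion is applied, running the same routine over the message followed by its CRC leaves a zero remainder, which is how a receiver can validate a frame.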

Usually, CAN hardware has a set of send and receive buffers that may be implemented with RAM and DMA support. Addresses normally are recognized with masks on the receive side along with various settings so the hardware can respond automatically. The CAN hardware then can capture packets of interest while ignoring others.
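Mask-based address recognition usually boils down to one comparison. The sketch assumes a mask where a 1 bit means "this bit must match"; real controllers differ in mask polarity and register layout, and the identifiers here are invented.

```python
def accepts(can_id: int, filter_id: int, mask: int) -> bool:
    """True when the identifier matches the filter on every bit selected by the mask."""
    return (can_id & mask) == (filter_id & mask)


# Accept the block 0x120..0x12F of 11-bit identifiers and ignore everything else.
FILTER_ID = 0x120
MASK = 0x7F0

for can_id in (0x120, 0x12F, 0x130):
    print(hex(can_id), accepts(can_id, FILTER_ID, MASK))
```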

Although CAN packet addresses describe the contents, they can be used as destination addresses as well. In this case, a unique device would recognize a particular address or typically a batch of addresses. Functions can be associated with the address or specified in the packets. The higher-level protocols used on a CAN network would control how this works.

Higher-level protocols built on CAN include protocols like CANopen and DeviceNet. Initially based on CAN, these protocols also have been mapped to other networks like Ethernet. The Open DeviceNet Vendors Association (ODVA) manages DeviceNet. It’s now part of the Common Industrial Protocol (CIP), which also includes EtherNet/IP, ControlNet, and CompoNet.

CAN in Automation (CiA) manages CANopen. Like DeviceNet, CANopen provides a way for vendor hardware to coexist. Devices must support the higher-level protocol. These frameworks provide a way to query and control devices including high-level interfaces to standard device types.

OpenCAN should not be confused with CANopen, though. OpenCAN is an open-source project that provides an application programming interface (API) for controlling a CAN network.

CAN’s speed and data capacity weren’t enough for some of the latest demanding automotive applications including drive-by-X, so FlexRay was created. This network is strictly for automotive use and is supported on a limited number of microcontrollers. It runs at 10 Mbits/s and supports time-triggered and event-triggered operation. Like CAN, it’s fault tolerant. It’s also deterministic.

Likewise, the local interconnect network (LIN) is an automotive network that also can be used in non-automotive applications. It uses a master/slave architecture with up to 16 slave devices. It’s normally tied to a CAN backbone with LIN providing lower-cost control support such as panel switch and button input. It’s slow and uses a single wire. It also uses a checksum for error detection.
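The text does not say which checksum LIN uses. The sketch below assumes the classic LIN scheme, an inverted 8-bit sum with carry over the data bytes; the function names are made up, and the enhanced variant that also covers the identifier is not shown.

```python
def carry_sum(data: bytes) -> int:
    """8-bit sum in which any carry out of bit 7 is added back in."""
    total = 0
    for byte in data:
        total += byte
        if total > 0xFF:
            total -= 0xFF  # equivalent to dropping the carry and adding 1
    return total


def lin_checksum(data: bytes) -> int:
    """Classic LIN checksum: the inverted carry sum of the data bytes."""
    return (~carry_sum(data)) & 0xFF


frame = bytes([0x4A, 0x55, 0x93, 0xE5])
checksum = lin_checksum(frame)
print(hex(checksum))

# A receiver sums data plus checksum and expects 0xFF.
print(hex(carry_sum(frame + bytes([checksum]))))
```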

Media Oriented Systems Transport (MOST) is a multimedia network for automotive applications used in some high-end vehicles. It runs up to 150 Mbits/s. However, it’s being challenged by Ethernet (see “Automotive Ethernet Arrives”) from the OPEN Alliance (One-Pair Ether-Net). Ethernet uses a single twisted pair that can deliver power too. It runs up to 100 Mbits/s and uses standard Ethernet protocols. Also, it supports the 802.1BA audio/video bridging (AVB) standard.

J1939:

Society of Automotive Engineers (SAE) J1939 is the vehicle bus standard used for communication and diagnostics among vehicle components, originally by the car and heavy-duty truck industry in the United States.

SAE J1939 is used in the commercial vehicle area for communication throughout the vehicle. With a different physical layer it is used between the tractor and trailer. This is specified in ISO 11992.

SAE J1939 defines five layers in the 7-layer OSI network model, and this includes the CAN 2.0b specification (using only the 29-bit/"extended" identifier) for the physical and data-link layers. Under J1939/11 and J1939/15 the baud rate is specified as 250 kbit/s, with J1939/14 specifying 500 kbit/s. The session and presentation layers are not part of the specification.
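To give a feel for how J1939 uses the 29-bit identifier, here is a small decoder. The field layout (3-bit priority, two page bits, 8-bit PDU format, 8-bit PDU specific, 8-bit source address) is not described in the text above and reflects my understanding of J1939-21; the class and function names are hypothetical.

```python
from typing import NamedTuple, Optional


class J1939Id(NamedTuple):
    priority: int
    pgn: int                    # parameter group number
    source: int
    destination: Optional[int]  # None for broadcast (PDU2) messages


def decode_j1939_id(can_id: int) -> J1939Id:
    """Split a 29-bit identifier into assumed J1939 fields."""
    priority = (can_id >> 26) & 0x7
    page_bits = (can_id >> 24) & 0x3    # extended data page and data page
    pdu_format = (can_id >> 16) & 0xFF
    pdu_specific = (can_id >> 8) & 0xFF
    source = can_id & 0xFF
    if pdu_format < 240:
        # PDU1: peer-to-peer, the PDU-specific byte is the destination address.
        return J1939Id(priority, (page_bits << 16) | (pdu_format << 8), source, pdu_specific)
    # PDU2: broadcast, the PDU-specific byte extends the parameter group number.
    return J1939Id(priority, (page_bits << 16) | (pdu_format << 8) | pdu_specific, source, None)


print(decode_j1939_id(0x18FEF100))
print(decode_j1939_id(0x18EAFF00))
```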

10-Mbit/s Ethernet and 100-Mbit/s Ethernet are alive and well in microcontrollers even though most microprocessor and SoC systems have moved on to 1-Gbit/s Ethernet. External Ethernet interface chips for the low end often have SPI, I2C, and low-pin-count (LPC) interfaces. And, Ethernet supports the IEEE-1588 time synchronization standard, which can be used in embedded applications to synchronize the clocks on each node (see “Keeping Time Ethernet-Style”).

More advanced applications may need real-time Ethernet support. Standards such as EtherCAT provide an alternative to standard Ethernet (see “Using The EtherCAT Industrial Communication Protocol In Designs”). EtherCAT can be part of a regular Ethernet network and data can flow between the two networks, but the EtherCAT side has more control over the protocol, allowing data to be analyzed on the fly.

Industrial Ethernet networks provide better timing and error support than the standard Ethernet implementations that leave most of these details to software (see “Industrial-Strength Networking”). Hardware allows Ethernet to be used in applications such as motion control that would be difficult with conventional Ethernet support.

As noted, most of the field bus protocols support Ethernet. Some extend to the industrial Ethernet hardware platforms.

The protocol stack is often one of the determining factors in whether low-end microcontrollers can handle wireless communication. The range of support is why there are proprietary alternatives based on 802.15.4 like Texas Instruments’ SimpliciTI, Cypress Semiconductor’s CyFi, and Microchip’s MiWi (see “Should You Choose Standard Or Custom P2P Wireless?”). These protocol stacks tend to be smaller and the overhead lower for lower-power operation.

Wireless operation can be a single-chip solution at the low end where Bluetooth and 802.15.4 platforms operate, but Wi-Fi tends to be a separate chip. Sometimes some or all of the protocol can be offloaded to the support chip or module. Some modules utilize a serial interface and an AT-style command set to support anything from sending packets to e-mail.

The protocol stacks or modules tend to hide the details of the wireless interface from most developers, but they still will be responsible for the network topology. Bluetooth provides a master/slave hierarchy that is relatively simple.

Other platforms, especially proprietary ones, provide point-to-point communication or star network support. More advanced wireless protocols like ZigBee support mesh networking so data can be passed from one node to another. This type of router operation is how the Internet works, but in a wireless only form.

Wi-Fi, especially 802.11b/g, usually can be supported by any microcontroller that could also support Ethernet, which is quite a few. A system generally would not support both unless it was for a gateway application.

The Wi-Fi modules can be extremely compact. I recently used a Gumstix AreoStorm module to control an iRobot Create using the TurtleCore module, also from Gumstix (see “TurtleCore Tacks Cortex-A8 On To iRobot Create”). The AreoStorm is based on the Texas Instruments Sitara AM3703, which in turn is based on Arm’s Cortex-A8 core. It includes a Wi-Fi (802.11b/g) and Bluetooth wireless module based on Marvell’s W2CBW003C chip.

Embedded developers may also want to take a closer look at WiFi Direct, which provides Bluetooth-style device connectivity between Wi-Fi devices. WiFi Direct support depends on software and hardware, so not all platforms can support it.

Cellular is another wireless platform. Its support tends to be at the module level since it requires integration with service providers.

Super Micro Computer’s SSEG2252P Gigabit Ethernet switch boasts 52 ports. Its 400-W PoE budget can be selectively delivered to any set of ports. The maximum power per port is 34.2 W, although the average is 7.5 W if 48 ports are supported. Each port has a priority, so if the system is over budget, low-priority links won’t get power.
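Priority-based power allocation of this kind can be sketched as a greedy pass over the ports. The 400-W budget and 34.2-W per-port cap come from the text; the policy details (lower number means higher priority, a request that does not fit is skipped) and the function name are assumptions rather than the switch's actual algorithm.

```python
from typing import Dict, List, Tuple


def allocate_poe(
    requests: List[Tuple[int, int, float]],
    budget_w: float = 400.0,
    per_port_max_w: float = 34.2,
) -> Dict[int, float]:
    """Grant power to (port, priority, watts) requests in priority order.

    Lower priority numbers are served first. A port whose request does not
    fit in the remaining budget gets no power.
    """
    granted: Dict[int, float] = {}
    remaining = budget_w
    for port, _priority, watts in sorted(requests, key=lambda r: r[1]):
        watts = min(watts, per_port_max_w)  # clamp to the per-port limit
        if watts <= remaining:
            granted[port] = watts
            remaining -= watts
    return granted


# With a deliberately small 70-W budget, the lowest-priority port loses out.
demo = allocate_poe([(1, 0, 30.0), (2, 1, 30.0), (3, 2, 30.0)], budget_w=70.0)
print(demo)
```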

Wireless solutions often work well with projected power. There are many options in this area. PowerCast provides a range of wireless power broadcasting solutions that can support an 8-bit Microchip PIC (see “PIC Module Runs Off RF Power”) or charge a battery for a Texas Instruments MSP430 (see “Battery Charged Remotely Using RF Power”).

In general, these types of wireless power solutions have a transmitter and a receiver. The receiver provides power and sometimes battery backup to a device. Another alternative, power scavenging, tends to work well for applications that require very little power since the power source is often limited. It may be solar, vibrational, or a host of other mechanical sources.

The application often will dictate the choice of network and power source, though designers sometimes have the flexibility to choose. Knowing the alternatives is a good starting point.

Peripheral Networks:

RS-232 normally isn’t used for networks. It’s one of the most common ways of hooking up devices, though, and embedded motherboards sport lots of serial ports. RS-422/485 can run point-to-point, but their multidrop capability has been used in the past and continues to be used today. There’s a definite tradeoff in maximum baud rate versus distance, but these networks are still very useful.

I2C Networks:

Developers often use I2C for peripheral support like that provided by SPI and USB. In its basic form, it’s a master/slave architecture. But it also can operate in a multimaster mode that allows a network of devices to communicate with each other. The I2C protocol (Fig. 1) utilizes two wires: SDA (data) and SCL (clock). In its simplest form, a single master controls the clock and initiates communication with slave devices.

Controller Networking:

Field-bus networks have been used in process automation for decades. Modicon initially developed Modbus in 1979. It could operate on a serial RS-232/422/485 connection, including a multidrop mode. It supports a 7-bit ASCII mode and an 8-bit remote terminal unit (RTU) mode. The high-level Modbus protocol was later moved to Ethernet as Modbus TCP/IP.

Robert Bosch GmbH developed CAN in 1983. It uses a single signal but is typically implemented using a balanced wire pair (Fig. 2). CAN initially targeted automotive applications where separate, robust drivers were a requirement. A host then provides separate transmit and receive signals to external drivers that are connected to the CAN bus.

2. CAN devices are normally connected to a CAN network via transceivers that provide isolation as well as drive capabilities because CAN networks are typically utilized in eletrically noisy environments such as automotive applications.

The CAN standard now defines two data frames in addition to other control and status frames with a similar format (Table 2). The two different data frames allow for an 11-bit and 29-bit address. Both are limited to 16 bytes of data. That may not seem like much, but CAN is designed for control applications where many messages with a small amount of data will be common. Operations such as reprogramming the flash memory of a device are possible, but they take a lot of packets.

CAN also turns the conventional addressing scheme on its head. I2C and conventional networks like Ethernet, Serial RapidIO, and InfiniBand employ a destination address. I2C doesn’t have a source address since it’s usually a single master platform, but the other networks typically include a source address as well.

With CAN, the address fields are used to describe the contents of the packet, not the source or destination. In fact, a packet may be sent without any device on the network doing anything with it.

Typically, a CAN packet is generated in response to an external event such as a switch closing or a sensor changing. Each event has its own address, so more than one device can send the same type of message. It also means that a packet is broadcast and any number of devices might utilize the information. A single event like a switch closure could initiate actions on a number of devices such as turning on a light or unlocking a door.

An application normally allocates addresses in blocks so they can essentially include data when simple events are being specified. The packet data is used when more information is required. For example, the address may be used to indicate an error, and the data provides the type of error.
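As a rough illustration of block allocation, the sketch below reserves a block of identifiers for error events and lets the first data byte carry the error type. The block base, block size, and `classify` function are hypothetical values chosen for the example, not part of any CAN standard.

```python
ERROR_BLOCK_BASE = 0x100   # hypothetical: identifiers 0x100-0x10F signal errors
ERROR_BLOCK_SIZE = 16


def classify(can_id, data=b""):
    """Map an identifier (and optional payload) to an application-level event."""
    offset = can_id - ERROR_BLOCK_BASE
    if 0 <= offset < ERROR_BLOCK_SIZE:
        # The identifier alone says "error from unit <offset>";
        # the first data byte, when present, gives the type of error.
        detail = data[0] if data else None
        return ("error", offset, detail)
    return ("other", can_id, None)
```

Here the identifier itself carries the simple event, and the data field is consulted only when more information is required.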

CAN includes error checking for each packet via a 15-bit cyclic redundancy check (CRC). The CRC is on the entire packet, not just the data field, which might not even exist. There’s also support for acknowledgement and retransmission among other features. Most of these features are built into CAN hardware, though it’s possible to implement CAN in bit-banging software.
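For readers who want to see what the hardware is doing, here is a bit-serial sketch of the 15-bit CRC in Python. It assumes the generator polynomial from the Bosch CAN specification (0x4599, with the x^15 term implied) and that the caller supplies the already unstuffed bits that the CRC covers. The function names are hypothetical.

```python
CAN_CRC15_POLY = 0x4599  # x^15+x^14+x^10+x^8+x^7+x^4+x^3+1, top bit implied


def can_crc15(bits):
    """Return the 15-bit CRC of an iterable of 0/1 bit values."""
    crc = 0
    for bit in bits:
        feedback = bit ^ ((crc >> 14) & 1)  # incoming bit XOR register MSB
        crc = (crc << 1) & 0x7FFF           # shift left, keep 15 bits
        if feedback:
            crc ^= CAN_CRC15_POLY
    return crc


def crc_bits(crc):
    """Expand a 15-bit CRC into a list of bits, most significant bit first."""
    return [(crc >> shift) & 1 for shift in range(14, -1, -1)]
```

A receiver can run the same routine over the message bits followed by the received CRC bits. A result of zero means no error was detected.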

Usually, CAN hardware has a set of send and receive buffers that may be implemented with RAM and DMA support. Addresses normally are recognized with masks on the receive side along with various settings so the hardware can respond automatically. The CAN hardware then can capture packets of interest while ignoring others.
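Mask-based acceptance filtering is easy to model in software. The sketch below is illustrative only: real controllers differ in how many filter/mask pairs they offer and how they are configured, and the function names are hypothetical.

```python
def accepts(can_id: int, filter_id: int, mask: int) -> bool:
    """True when can_id matches filter_id in every bit position set in mask."""
    return (can_id & mask) == (filter_id & mask)


def receive(can_ids, filters):
    """Keep only identifiers that pass at least one (filter_id, mask) pair."""
    return [cid for cid in can_ids
            if any(accepts(cid, fid, mask) for fid, mask in filters)]
```

With a filter of 0x100 and a mask of 0x7F0, for example, the low four bits are "don't care," so a node captures the whole block 0x100 through 0x10F and ignores everything else.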

Although CAN packet addresses describe the contents, they can be used as destination addresses as well. In this case, a unique device would recognize a particular address or typically a batch of addresses. Functions can be associated with the address or specified in the packets. The higher-level protocols used on a CAN network would control how this works.

Higher-level protocols built on CAN include protocols like CANopen and DeviceNet. Initially based on CAN, these protocols also have been mapped to other networks like Ethernet. The Open DeviceNet Vendors Association (ODVA) manages DeviceNet. It’s now part of the Common Industrial Protocol (CIP), which also includes EtherNet/IP, ControlNet, and CompoNet.

CAN in Automation (CiA) manages CANopen. Like DeviceNet, CANopen provides a way for vendor hardware to coexist. Devices must support the higher-level protocol. These frameworks provide a way to query and control devices including high-level interfaces to standard device types.

OpenCAN should not be confused with CANopen, though. OpenCAN is an open-source project that provides an application programming interface (API) for controlling a CAN network.

Automotive Networking

CAN started out in the automotive industry and is still heavily used there. But, as noted, CAN also is utilized a lot in other areas such as process control.

CAN’s speed and data capacity weren’t enough for some of the latest demanding automotive applications including drive-by-X, so FlexRay was created. This network is strictly for automotive use and is supported on a limited number of microcontrollers. It runs at 10 Mbits/s and supports time-triggered and event-triggered operation. Like CAN, it’s fault tolerant. It’s also deterministic.

Likewise, the local interconnect network (LIN) is an automotive network that also can be used in non-automotive applications. It uses a master/slave architecture with up to 16 slave devices. It’s normally tied to a CAN backbone with LIN providing lower-cost control support such as panel switch and button input. It’s slow and uses a single wire. It also uses a checksum for error detection.
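The text does not say which checksum LIN uses. The sketch below assumes the "classic" LIN checksum, which is the inverted 8-bit sum of the data bytes with the carry folded back in. Later LIN revisions also define an "enhanced" variant that includes the protected identifier, which is not shown here. The function names are hypothetical.

```python
def lin_classic_checksum(data: bytes) -> int:
    """Inverted 8-bit sum of the data bytes, with end-around carry."""
    total = 0
    for byte in data:
        total += byte
        if total > 0xFF:
            total -= 0xFF  # drop the carry bit and add it back to the low byte
    return (~total) & 0xFF


def lin_checksum_ok(data: bytes, checksum: int) -> bool:
    """A frame is consistent when data plus checksum sums (with carry) to 0xFF."""
    return lin_classic_checksum(bytes(data) + bytes([checksum])) == 0x00
```

For the data bytes 0x4A, 0x55, 0x93, and 0xE5, this routine yields a checksum of 0xE6.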

Media Oriented Systems Transport (MOST) is a multimedia network for automotive applications used in some high-end vehicles. It runs up to 150 Mbits/s. However, it’s being challenged by Ethernet (see “Automotive Ethernet Arrives”) from the OPEN Alliance (One-Pair Ether-Net). Ethernet uses a single twisted pair that can deliver power too. It runs up to 100 Mbits/s and uses standard Ethernet protocols. Also, it supports the 802.1BA audio/video bridging (AVB) standard.

J1939:

SAE J1939, from the Society of Automotive Engineers (SAE), is the vehicle bus standard used for communication and diagnostics among vehicle components. It was originally adopted by the car and heavy-duty truck industry in the United States.

SAE J1939 is used in the commercial vehicle area for communication throughout the vehicle. With a different physical layer it is used between the tractor and trailer. This is specified in ISO 11992.

SAE J1939 defines five layers in the 7-layer OSI network model, and this includes the CAN 2.0B specification (using only the 29-bit/"extended" identifier) for the physical and data-link layers. Under J1939/11 and J1939/15 the baud rate is specified as 250 kbit/s, with J1939/14 specifying 500 kbit/s. The session and presentation layers are not part of the specification.
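The way J1939 uses the 29-bit identifier is not spelled out above, so the following Python sketch relies on the commonly documented field layout: a 3-bit priority, two page bits, an 8-bit PDU format, an 8-bit PDU specific field, and an 8-bit source address. Treat it as an assumption-laden illustration. The `J1939Id` type and `decode_j1939_id` function are hypothetical names.

```python
from typing import NamedTuple


class J1939Id(NamedTuple):
    priority: int
    pgn: int          # parameter group number
    source: int
    destination: int  # 0xFF (global) for broadcast-style PDU2 messages


def decode_j1939_id(can_id: int) -> J1939Id:
    """Split a 29-bit identifier into its assumed J1939 fields."""
    if not 0 <= can_id < (1 << 29):
        raise ValueError("J1939 uses 29-bit identifiers")
    priority = (can_id >> 26) & 0x7
    page_bits = (can_id >> 24) & 0x3      # extended data page + data page
    pdu_format = (can_id >> 16) & 0xFF
    pdu_specific = (can_id >> 8) & 0xFF
    source = can_id & 0xFF
    if pdu_format < 240:
        # PDU1: the PDU specific field holds a destination address.
        pgn = (page_bits << 16) | (pdu_format << 8)
        destination = pdu_specific
    else:
        # PDU2: the PDU specific field extends the PGN; message is broadcast.
        pgn = (page_bits << 16) | (pdu_format << 8) | pdu_specific
        destination = 0xFF
    return J1939Id(priority, pgn, source, destination)
```

Decoding the identifier 0x18FEF100 this way gives priority 6, PGN 0xFEF1, and source address 0x00.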

Ethernet Networks:

Arcnet and Token Ring are long gone. InfiniBand and Serial RapidIO take the high ground targeting applications like supercomputing and communications. Applications that use these networks have dedicated controllers or a very high-performance microprocessor or system-on-a-chip (SoC). Ethernet can play with these big boys, but it’s found where they aren’t: on microcontrollers.

10-Mbit/s Ethernet and 100-Mbit/s Ethernet are alive and well in microcontrollers even though most microprocessor and SoC systems have moved on to 1-Gbit/s Ethernet. External Ethernet interface chips for the low end often have SPI, I2C, and low-pin-count (LPC) interfaces. And, Ethernet supports the IEEE-1588 time synchronization standard, which can be used in embedded applications to synchronize the clocks on each node (see “Keeping Time Ethernet-Style”).
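The heart of IEEE-1588 clock synchronization is a four-timestamp exchange between a master and a slave. The Python sketch below shows only that arithmetic. It assumes the path delay is the same in both directions, and the function name and argument order are hypothetical.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate slave clock offset and one-way path delay.

    t1: master sends Sync          (master clock)
    t2: slave receives Sync        (slave clock)
    t3: slave sends Delay_Req      (slave clock)
    t4: master receives Delay_Req  (master clock)
    """
    delay = ((t2 - t1) + (t4 - t3)) / 2
    offset = ((t2 - t1) - (t4 - t3)) / 2   # positive: slave clock is ahead
    return offset, delay
```

The slave subtracts the computed offset from its clock. Any asymmetry between the two directions shows up directly as error in the offset estimate.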

More advanced applications may need real-time Ethernet support. Standards such as EtherCAT provide an alternative to standard Ethernet (see “Using The EtherCAT Industrial Communication Protocol In Designs”). EtherCAT can be part of a regular Ethernet network and data can flow between the two networks, but the EtherCAT side has more control over the protocol, allowing data to be analyzed on the fly.

Industrial Ethernet networks provide better timing and error support than the standard Ethernet implementations that leave most of these details to software (see “Industrial-Strength Networking”). Hardware allows Ethernet to be used in applications such as motion control that would be difficult with conventional Ethernet support.

As noted, most of the field bus protocols support Ethernet. Some extend to the industrial Ethernet hardware platforms.

Wireless Networks

Developers can find as much variety in microcontroller-supported wireless networks as in wired networks. Many proprietary solutions operate in the industrial, scientific, and medical (ISM) bands. Bluetooth, Z-Wave, 802.15.4 (the basis for ZigBee), and Wi-Fi also are available.

The protocol stack is often one of the determining factors in whether low-end microcontrollers can handle wireless communication. The range of support is why there are proprietary alternatives based on 802.15.4 like Texas Instruments’ SimpliciTI, Cypress Semiconductor’s CyFi, and Microchip’s MiWi (see “Should You Choose Standard Or Custom P2P Wireless?”). These protocol stacks tend to be smaller and the overhead lower for lower-power operation.

Wireless operation can be a single-chip solution at the low end where Bluetooth and 802.15.4 platforms operate, but Wi-Fi tends to be a separate chip. Sometimes some or all of the protocol can be offloaded to the support chip or module. Some modules utilize a serial interface and an AT-style command set to support anything from sending packets to e-mail.

The protocol stacks or modules tend to hide the details of the wireless interface from most developers, but they still will be responsible for the network topology. Bluetooth provides a master/slave hierarchy that is relatively simple.

Other platforms, especially proprietary ones, provide point-to-point communication or star network support. More advanced wireless protocols like ZigBee support mesh networking so data can be passed from one node to another. This type of router operation is how the Internet works, but in a wireless only form.
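To make the multi-hop idea concrete, the sketch below finds the shortest hop-count route through a set of radio links with a breadth-first search. It is not ZigBee's actual routing algorithm, which discovers routes on demand over the air. The `find_route` function and the link table format are assumptions made for illustration.

```python
from collections import deque


def find_route(links, source, dest):
    """Return the list of nodes from source to dest, or None if unreachable.

    links maps each node to the neighbors within its radio range.
    """
    previous = {source: None}          # also serves as the visited set
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == dest:
            route = []
            while node is not None:    # walk back to the source
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbor in links.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None
```

With links A-B, B-C, and C-D, a packet from A reaches D through B and C even though A and D are out of each other's radio range.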

Wi-Fi, especially 802.11b/g, usually can be supported by any microcontroller that could also support Ethernet, which is quite a few. A system generally would not support both unless it was for a gateway application.

The Wi-Fi modules can be extremely compact. I recently used a Gumstix AreoStorm module to control an iRobot Create using the TurtleCore module, also from Gumstix (see “TurtleCore Tacks Cortex-A8 On To iRobot Create”). The AreoStorm is based on the Texas Instruments Sitara AM3703, which in turn is based on Arm’s Cortex-A8 core. It includes a Wi-Fi (802.11b/g) and Bluetooth wireless module based on Marvell’s W2CBW003C chip.

Embedded developers may also want to take a closer look at WiFi Direct, which provides Bluetooth-style device connectivity between Wi-Fi devices. WiFi Direct support depends on software and hardware, so not all platforms can support it.

Cellular is another wireless platform. Its support tends to be at the module level since it requires integration with service providers.

Powering Network Devices

Power delivery is frequently overlooked in microcontroller networks. It isn’t an issue for on-board networks or rack-based boards, but it does arise for remote devices. Solutions often are proprietary. For Ethernet-based networks, a standard interface is available. Power over Ethernet (PoE) uses conventional CAT5 and CAT6 cables to provide more than 25 W to a device.

Super Micro Computer’s SSEG2252P Gigabit Ethernet switch boasts 52 ports. Its 400-W PoE budget can be selectively delivered to any set of ports. The maximum power per port is 34.2 W, although the average is 7.5 W if 48 ports are supported. Each port has a priority, so if the system is over budget, low-priority links won’t get power.
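Priority-based power budgeting can be sketched as a simple greedy allocation. The code below is not how any particular switch implements it. The `allocate_poe` function, the request tuple layout, and the rule that a lower priority number wins are all assumptions for illustration.

```python
def allocate_poe(requests, budget_w, port_limit_w):
    """Grant power to ports in priority order until the budget runs out.

    requests: list of (port, priority, watts); a lower priority number
    is treated as more important. Returns {port: granted_watts}, with
    0.0 for ports that are left unpowered.
    """
    granted = {}
    remaining = budget_w
    for port, _priority, watts in sorted(requests, key=lambda r: (r[1], r[0])):
        want = min(watts, port_limit_w)    # no port may exceed the per-port cap
        if want <= remaining:
            granted[port] = want
            remaining -= want
        else:
            granted[port] = 0.0            # over budget: this link gets no power
    return granted
```

Calling it with a 400-W budget and a 34.2-W per-port cap mirrors the numbers quoted above: high-priority ports are served first, and whatever no longer fits is left off.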

Wireless solutions often work well with projected power. There are many options in this area. PowerCast provides a range of wireless power broadcasting solutions that can support an 8-bit Microchip PIC (see “PIC Module Runs Off RF Power”) or charge a battery for a Texas Instruments MSP430 (see “Battery Charged Remotely Using RF Power”).

In general, these types of wireless power solutions have a transmitter and a receiver. The receiver provides power and sometimes battery backup to a device. Another alternative, power scavenging, tends to work well for applications that require very little power since the power source is often limited. It may be solar, vibrational, or a host of other mechanical sources.

The application often will dictate the choice of network and power source, though designers sometimes have the flexibility to choose. Knowing the alternatives is a good starting point.

Various Wireless Technologies

Wireless has become a major feature for just about every new electronic product. It adds flexibility, convenience, and remote monitoring and control without expensive wiring and cabling. The range of applications is staggering, from simple toys to consumer electronic products to industrial automation.

This great rush to make everything wireless has produced a flood of different wireless technologies and protocols. Some were established primarily for one application, while others are more general and have many uses.

ANT+ ANT and ANT+ are proprietary wireless sensor network technologies used in the collection and transfer of sensor data. As a type of personal-area network (PAN), ANT’s primary applications include sports, wellness, and home health. For example, it’s used in heart-rate monitors, speedometers, calorimeters, blood pressure monitors, position tracking, homing devices, and thermometers. Typical radios are built into sports watches and equipment like workout machines.

The technology divides the 2.4-GHz industrial, scientific, and medical (ISM) band into 1-MHz channels. The radios have a basic data rate of 1 Mbit/s. A time division multiplexing (TDM) scheme accommodates multiple sensors. ANT+ supports star, tree, mesh, and peer-to-peer topologies. The protocol and packet format is simple. And, it boasts ultra-low power consumption and long battery life.

Bluetooth Bluetooth (www.bluetooth.org, www.bluetooth.com) is another PAN technology. The Bluetooth Special Interest Group (SIG) manages the standard. IEEE 802.15.1 also covers it. Bluetooth primarily is used in wireless headsets for cell phones. It’s also used in some laptops, printers, wireless speakers, digital cameras, wireless keyboards and mice, and video games. Bluetooth Low Energy, which has a simpler design, targets health and medical applications. It effectively competes with ANT+.

Bluetooth operates in the 2.4-GHz ISM band and uses frequency-hopping spread spectrum with Gaussian frequency shift keying (GFSK), differential quadrature phase shift keying (DQPSK), or eight-phase differential phase-shift keying (8DPSK) modulation. The basic gross data rate is 1 Mbit/s for GFSK, 2 Mbits/s for DQPSK, and 3 Mbits/s for 8DPSK. There are also three power classes of 0 dBm (1 mW), 4 dBm (2.5 mW), and 20 dBm (100 mW), which essentially determine range. Standard range is about 10 meters and up to 100 meters at maximum power with a clear path.
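The dBm figures for the three power classes follow from the definition of dBm as decibels relative to 1 mW. A short Python sketch of the conversion, with hypothetical function names:

```python
import math


def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw: float) -> float:
    """Convert a power level in milliwatts to dBm."""
    return 10 * math.log10(mw)
```

Plugging in 0, 4, and 20 dBm gives 1 mW, roughly 2.5 mW, and 100 mW, matching the class figures quoted above.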

Bluetooth is also capable of forming simple networks of up to seven devices. Called piconets, these PANs aren’t widely used. The peer-to-peer communications mode is the most common. The Bluetooth SIG defines multiple “profiles” or software applications that have been certified for interoperability among vendor chips, modules, and software.

Cellular With services from most network carriers, cellular radio provides data transmission capability for machine-to-machine (M2M) applications. M2M is used for remote monitoring and control. Cellular radio modules are widely available to build into other equipment (Fig. 1). Most of the standard technologies are used, such as GSM/GPRS/EDGE/WCDMA/HSPA on the AT&T and T-Mobile networks and cdma2000/EV-DO on the Verizon and Sprint networks.

1. Put a cell phone in your product. The Sierra Wireless AirPrime SL808x series is a full UMTS/WCDMA/HSDPA data cell phone designed to be embedded into other products. The module measures 25 by 30 mm, and it can transfer data downloads up to 3.6 Mbits/s.

LTE capability is also being made available for higher-speed applications like HD video surveillance. Otherwise, data rates are usually low (< 1 Mbit/s). The working range is from 1 to 10 km, which is the range of most cell sites today.

IEEE 802.15.4 IEEE 802.15.4 is designed to support peer-to-peer links as well as wireless sensor networks. The standard defines the basic physical layer (PHY), including frequency range, modulation, data rates, and frame format, and the media access control (MAC) layer. Separate protocol stacks are then designed to use the basic PHY and MAC. Several wireless standards use the 802.15.4 standard as the PHY/MAC base, including ISA100, Wireless HART, ZigBee, and 6LoWPAN.

The standard defines three basic frequency ranges. The most widely used is the worldwide 2.4-GHz ISM band (16 channels). The basic data rate is 250 kbits/s. Another range is the 902- to 928-MHz ISM band in the U.S. (10 channels). The data rate is 40 kbits/s or 250 kbits/s. Then there’s the European 868-MHz band (one channel) with a data rate of 20 kbits/s.
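The 27 channels across the three bands map to center frequencies by simple formulas. The sketch below uses the channel numbering from the original 802.15.4 channel plan as commonly documented (channel 0 at 868.3 MHz, channels 1 to 10 in the 915-MHz band, channels 11 to 26 at 2.4 GHz). That numbering is an assumption not stated in the text, and the function name is hypothetical.

```python
def channel_center_mhz(channel: int) -> float:
    """Return the assumed center frequency in MHz for an 802.15.4 channel."""
    if channel == 0:
        return 868.3                           # single European channel
    if 1 <= channel <= 10:
        return 906.0 + 2.0 * (channel - 1)     # 902- to 928-MHz band
    if 11 <= channel <= 26:
        return 2405.0 + 5.0 * (channel - 11)   # 2.4-GHz band
    raise ValueError("channel must be in the range 0 to 26")
```

Channel 11 therefore sits at 2405 MHz and channel 26 at 2480 MHz, with 5-MHz spacing in between.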

All three ranges use direct sequence spread spectrum (DSSS) with either binary phase-shift keying (BPSK) or offset quadrature phase-shift keying (O-QPSK) modulation. The multiple access mode is carrier sense multiple access with collision avoidance (CSMA-CA). The minimum defined power level is –3 dBm (0.5 mW). The most common power level is 0 dBm. A 20-dBm level is defined for longer-range applications. Typical range is less than 10 meters.
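CSMA-CA boils down to waiting a random time, checking the channel, and backing off with a wider window if it is busy. The Python sketch below models the unslotted variant in a simplified form. The default exponent and retry values are what I understand to be the standard's defaults and should be treated as assumptions. The `channel_is_clear` callback stands in for the radio's clear-channel assessment.

```python
import random


def csma_ca_backoff(channel_is_clear, min_be=3, max_be=5,
                    max_backoffs=4, rng=random):
    """Return total backoff periods waited before a clear channel, or None.

    channel_is_clear: callable returning True when the channel is idle.
    min_be / max_be: smallest and largest backoff exponent (assumed defaults).
    max_backoffs: extra attempts allowed after the first (assumed default).
    """
    be = min_be
    waited = 0
    for _attempt in range(max_backoffs + 1):
        waited += rng.randint(0, 2 ** be - 1)  # random delay, in backoff periods
        if channel_is_clear():
            return waited                      # channel idle: transmit now
        be = min(be + 1, max_be)               # busy: widen the window and retry
    return None                                # give up: channel access failure
```

Because the window widens after every busy assessment, nodes that collide once are unlikely to choose the same delay again.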

IEEE 802.22 Also known as the Wireless Regional Area Network (WRAN) standard, IEEE 802.22 is one of the IEEE’s newest wireless standards. It’s designed to be used in the license-free unused broadcast TV channels called white space. These 6-MHz channels occupy the frequency range from 470 MHz to 698 MHz. Their availability varies from location to location. The standard isn’t widely used yet, though. White space radios use proprietary protocols and wireless standards.

Because of the potential for interference to TV stations, 802.22 radios must meet strict requirements and use cognitive radio techniques to find an unused channel. The radios use frequency-agile circuitry to scan for unused channels and to listen for potential interfering signals. They also use a TV white space database to determine the optimum place to be for the best results without interfering with other communications.

This standard is designed for fixed wireless broadband connections. The basestations talk to multiple fixed-location consumer radios for Internet access or other services. They would compete with cable TV and phone companies and/or provide broadband connectivity in rural areas underserved by other companies. While mobile operation is possible, most radios will be fixed.

The standard uses orthogonal frequency-division multiplexing (OFDM) to provide spectral efficiency sufficient to supply multiple user channels with a minimum of 1.5-Mbit/s download speed and 384-kbit/s upload speed. The maximum possible data rate per 6-MHz channel ranges from 18 to 22 Mbits/s. The great advantage of 802.22 is its use of the VHF and low UHF frequencies, which offer very long-range connections. With the maximum allowed 4 W of effective isotropic radiated power (EIRP), a basestation range of 100 km (about 62 miles) is possible.

ISA100.11a Developed by the International Society of Automation, ISA100.11a is designed for industrial process control and factory automation. It uses the 802.15.4 PHY and MAC but adds special features for security, reliability, feedback control, and other industrial requirements.

Infrared Infrared (IR) wireless technology uses light instead of radio for its connectivity. Infrared is low-frequency, invisible light that can serve as a carrier of high-speed digital data. The primary wavelength range is 850 to 940 nm. The transmitter is an IR LED, and the receiver is a diode photodetector and amplifier. The light wave is usually modulated with a high-frequency signal that is, in turn, coded and modulated by the digital data to be transmitted.

Most TV sets and consumer electronic devices use an IR remote control, which has a range of several meters and a narrow angle (<30°) of transmission. Various protocols and coding schemes are used. Also, IR devices must have a clear line-of-sight path for a connection.

There is a separate standard for data transmission called IrDA. The Infrared Data Association sets and maintains its specifications. IrDA exists in many versions mainly delineated by their data rate. Data rates range from a low of 9.6 to 115.2 kbits/s in increments to 4 Mbits/s, 16 Mbits/s, 96 Mbits/s, and 512 Mbits/s to 1 Gbit/s. New standards for rates of 5 and 10 Gbits/s are in development. The range is less than a meter.

IR has several key benefits. First, since it’s light instead of a radio wave, it isn’t susceptible to radio interference of any kind. Second, it’s highly secure since its signals are difficult to intercept or spoof.

IR once was widely used in laptops, PDAs, some cameras, and printers. It has mainly been replaced by other wireless technologies like Bluetooth and Wi-Fi. It is still widely used in consumer remote controls, but new RF remote controls are gradually replacing the IR remotes in some consumer equipment. Some designs include both IR and RF.

ISM Band Most of these standards use the unlicensed ISM bands set aside by the Federal Communications Commission (FCC) in Part 15 of the Code of Federal Regulations (CFR) 47. The most widely used ISM band is the 2.4- to 2.483-GHz band, which is used by cordless phones, Wi-Fi, Bluetooth, 802.15.4 radios, and many other devices. The second most widely used band is the 902- to 928-MHz band, with 915 MHz being a sweet spot.

Other popular ISM frequencies are 315 MHz for garage door openers and remote keyless entry (RKE) applications and 433 MHz for remote temperature monitoring. Other less used frequencies are 13.56 MHz, 27 MHz, and 72 MHz. For full consideration of all available bands, see Part 15, which is a must-have document for anyone designing and building short-range wireless products. It’s available through the U.S. Government Printing Office.

For many simple wireless applications that do not require complex network connections, security, or other custom features, simple proprietary protocols can be designed. Many vendors of ISM band transceivers offer standard protocol support and development systems that can be used to develop a protocol for a specific application.

Near-Field Communications Near-field communications (NFC) is an ultra-short-range technology that was designed for secure payment transactions and similar applications. Its maximum range is about 20 cm, with 4 to 5 cm being a typical link distance. This short distance greatly enhances the security of the connection, which is also usually encrypted. Many smart phones include NFC, and many others are expected to get it eventually. The goal is to implement NFC payment systems where consumers can tap a payment terminal with their cell phone instead of using a credit card.

NFC uses the 13.56-MHz ISM frequency. At this low frequency, the transmit and receive loop antennas function mainly as the primary and secondary windings of a transformer, respectively. The transmission is by the magnetic field of the signal rather than the accompanying electric field, which is less dominant in the near field.

NFC is also used to read tags that are powered up by the interrogation of an NFC transmitted signal. The unpowered tags convert the RF signal into dc that powers a processor and memory that can provide information related to the application. Numerous NFC transceiver chips are available to implement new applications, and multiple standards exist.

RFID tags are small, flat, and cheap and can be attached to anything that must be tracked or identified. They have replaced bar codes in some applications. RFID uses the 13.56-MHz ISM frequency, but other frequencies are also used including 125 kHz, 134.5 kHz, and frequencies in the 902- to 928-MHz range. Multiple ISO/IEC standards exist.

6LoWPAN 6LoWPAN means IPv6 protocol over low-power wireless PANs. Developed by the Internet Engineering Task Force (IETF), it provides a way to transmit IPv6 packets over low-power wireless point-to-point (P2P) links and mesh networks. This standard (RFC4944) also permits the implementation of the Internet of Things on even the smallest and most remote devices.

The protocol provides encapsulation and header compression routines for use with 802.15.4 radios. The IETF is said to be working on a version of this protocol for Bluetooth. If your wireless device must have an Internet connection, this is your technology of choice.

Ultra Wideband Ultra Wideband (UWB) uses the 3.1- to 10.6-GHz range to provide high-speed data connectivity for PCs, laptops, set-top boxes, and other devices. The band is divided up into multiple 528-MHz wide channels. OFDM is used to provide data rates from 53 Mbits/s to 480 Mbits/s. The WiMedia Alliance originally defined the standard.

Devices use ultra-low power to prevent interference with services in the assigned band. This restricts range to a maximum of about 10 meters. In most applications, the range is less than a few meters so the highest data rates can be used. UWB is used primarily in video applications such as TV sets, cameras, laptops, and video monitors in docking stations.

Wi-Fi Wi-Fi is the commercial name of the wireless technology defined by the IEEE 802.11 standards. Next to Bluetooth, Wi-Fi is by far the most widespread wireless technology. It is in smart phones, laptops, tablets, and ultrabooks. It’s also used in TV sets, video accessories, and home wireless routers. It’s deployed in many industrial applications as well. Wi-Fi is now showing up in cellular networks where carriers are using it to offload some data traffic like video that clogs the network.

Wi-Fi has been around since the late 1990s when a version called 802.11b became popular. It offered up to 11-Mbit/s data rates in the 2.4-GHz ISM band. Since then, new standards have been developed including 802.11a (5-GHz band), 802.11g, and 802.11n using OFDM to get speeds up to 54 and 300 Mbits/s under the most favorable conditions.

More recent standards include 802.11ac, which uses multiple-input multiple-output (MIMO) to deliver up to 3 Gbits/s in the 5-GHz ISM band. The 802.11ad standard is designed to deliver data rates up to 7 Gbits/s in the unlicensed 60-GHz band. You will hear of 802.11ad referred to as WiGig, its commercial designation. Its main use is video transfer in consumer electronic systems with HDTV and in high-resolution video monitors.

Wi-Fi is readily available in chip form or as complete drop-in modules. The range is up to 100 meters under the best line-of-sight conditions. This is a great option where longer range and high speeds are needed for the application.

Wireless HART HART is the Highway Addressable Remote Transducer protocol, a wired networking technology widely used in industry for sensor and actuator monitoring and control. Wireless HART is the wireless version of this standard. The base of it is the 802.15.4 standard in the 2.4-GHz band. The HART protocol is a software application on the wireless transceivers.

WirelessHD WirelessHD is another high-speed technology using the 60-GHz unlicensed band. It also is supported by the IEEE 802.15.3c standard. It can achieve speeds to 28 Gbits/s over a range that tops out at about 10 meters in a straight, unblocked path. It is designed mainly for wireless video displays using interfaces like HDMI or DisplayPort, HDTV sets, and related consumer devices like DVRs and DVD players.

WirelessUSB WirelessUSB is a proprietary standard from Cypress Semiconductor. It is not the same as Wireless USB, which is a wireless version of the popular wired USB interface standard. Wireless USB generally refers to Ultra Wideband. WirelessUSB NL uses the 2.4-GHz band with GFSK modulation. Data rates up to 1 Mbit/s are possible. This ultra-low-power technology is designed primarily for human interface devices (HIDs) like keyboards, mice, and game controllers. It uses a simple protocol.

Another version of WirelessUSB designated LP uses the same 2.4-GHz band but employs direct-sequence spread-spectrum (DSSS) at a lower speed (up to 250 kbits/s) for greater range and reliability in the presence of noise. The LP version can also implement the GFSK 1-Mbit/s feature if desired. The maximum power level is 4 dBm, and a 16-bit cyclic redundancy code (CRC) is used for error detection. Versions of the transceivers can be had with an on-chip Cypress PSoC microcontroller.

ZigBee ZigBee is the standard of the ZigBee Alliance. It is a software protocol and technology that uses the 802.15.4 transceiver as a base. It provides a complete protocol stack designed to implement multiple types of radio networks that include point-to-point, tree, star, and point-to-multipoint (Fig. 2). Its main feature is the ability to build large mesh networks for sensor monitoring. And, it can handle up to 65,000 nodes.

2. The CEL MeshConnect module using Ember EM357 devices makes ZigBee wireless applications fast and easy to implement.

ZigBee also provides profiles or software routines that implement specific applications for consumer home automation, building automation, and industrial control. Examples include building automation for lighting and HVAC control, as well as smart meters that implement home-area network connections in automated electric meters.

Low-power versions are used in health care for remote patient monitoring and similar applications. A lighting profile is available for LED lighting fixtures and their control. There is also a ZigBee remote control profile to implement an RF rather than infrared remote control for consumer TV and other devices. ZigBee is used in factory automation and can be used in many M2M and Internet of Things applications as well.

Z-Wave Z-Wave is a proprietary wireless standard originally developed by Zensys, which is now a part of Sigma Designs. Recently, the International Telecommunications Union (ITU) included the Z-Wave PHY and MAC layers as an option in its G.9959 standard, which defines a set of guidelines for sub-1-GHz narrowband wireless devices.

Z-Wave is a wireless mesh networking technology. A Z-Wave network can have up to 232 nodes. The wireless transceivers operate in the ISM band on a frequency of 908.42 MHz in the U.S. and Canada but use other frequencies depending on the country’s rules and regulations. The modulation is GFSK. Data rates available include 9600 bits/s and 40 kbits/s. Output power is 1 mW or 0 dBm. In free space conditions, a range of up to 30 meters is possible. The through-wall range is considerably shorter. The main application for Z-Wave has been home automation and control of lighting, thermostats, smoke detectors, door locks, appliances, and security systems.

dB loss = 37 dB + 20log(f) + 20log(d)

In this formula, f is the operating frequency in MHz and d is the range in miles. For example, the path loss of a 900-MHz signal over 2 miles is:

dB loss = 37 + 20log(900) + 20log(2) = 37 + 59 + 6 = 102 dB

Remember, this is the free space loss meaning a direct line-of-sight transmission with no obstacles. Trees, walls, or other possible barriers will significantly increase the path loss.
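The path-loss formula is easy to script for quick what-if comparisons. This Python sketch uses the same 37-dB constant and the same units (MHz and miles) as the formula above. The function name is hypothetical.

```python
import math


def free_space_loss_db(freq_mhz: float, distance_miles: float) -> float:
    """Free-space path loss in dB for a frequency in MHz and range in miles."""
    return 37.0 + 20.0 * math.log10(freq_mhz) + 20.0 * math.log10(distance_miles)
```

For 900 MHz over 2 miles, the function returns about 102 dB. Each doubling of distance or frequency adds roughly 6 dB, which is one reason sub-1-GHz radios reach farther than 2.4-GHz radios at the same power.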

Next, manipulate the following formula to ensure a link connection:

Receive sensitivity (minimum) = transmit power (dBm) + transmit antenna gain (dB) + receive antenna gain (dB) – path loss (dB) – fade margin (dB)

Fade margin is an estimate or best guess. It should be no less than, say, 5 dB, but it could be up to 40 dB to ensure 100 % link reliability. Other losses like transmission line loss should also be subtracted.

The resulting figure should be greater than the receiver sensitivity. Receiver sensitivities range from a low of about –70 dBm to –130 dBm or more. Assume a transmit power of 4 dBm, antenna gains of 0 dB, and the 102-dB path loss calculated above. Assume a fade margin of 10 dB. The link characteristics then are:

4 + 0 + 0 – 102 – 10 = –108 dBm

To obtain a reliable link, the receiver sensitivity must be –108 dBm or better; that is, the receiver must be able to detect signals at or below –108 dBm.
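The link-budget arithmetic can be wrapped in a pair of small Python functions. This is a sketch of the formula given above, with hypothetical function and parameter names. The `other_losses_db` argument is an added convenience for items such as transmission line loss.

```python
def required_sensitivity_dbm(tx_power_dbm, tx_gain_db, rx_gain_db,
                             path_loss_db, fade_margin_db,
                             other_losses_db=0.0):
    """Weakest signal level (dBm) the receiver must be able to detect."""
    return (tx_power_dbm + tx_gain_db + rx_gain_db
            - path_loss_db - fade_margin_db - other_losses_db)


def link_closes(receiver_sensitivity_dbm, **budget):
    """True when the receiver is sensitive enough for the given budget."""
    # A more negative sensitivity figure means a more sensitive receiver.
    return receiver_sensitivity_dbm <= required_sensitivity_dbm(**budget)
```

With the numbers from the example (4 dBm transmit power, 0-dB antennas, 102-dB path loss, 10-dB fade margin), the required sensitivity is –108 dBm. A –110-dBm receiver closes the link, while a –100-dBm receiver does not.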

In the automotive space, remote keyless entry (RKE) and remote start are the most widespread. Wireless remote reading of tire pressures is one interesting feature on some vehicles. GPS navigation has also become a widespread option on many cars. Radar, a prime wireless technology, is finding considerable application in speed control and automated braking.

Home consumer electronic products are loaded with wireless. Virtually all entertainment products such as HDTVs, DVRs, and cable and satellite boxes have remote controls. They’re still primarily IR, but RF wireless is now being incorporated. Other wireless applications include baby monitors, toys, games, and hobbies.

There are also wireless thermostats, remote thermometers and other weather monitors, garage door openers, security systems, and energy metering and affiliated monitors. Many homes now have wireless Internet access with a Wi-Fi router. There may even be a cellular femto cell to boost mobile coverage in the home. Cell phones, cordless phones, Bluetooth, and Wi-Fi are widespread.

Commercial applications include wireless temperature monitoring, wireless thermostats, and lighting control. Some video surveillance cameras use a wireless rather than coax link. Wireless payment systems in cell phones promise to revolutionize commerce.

In industry, wireless has gradually replaced wired connections. Remote monitoring of physical characteristics such as temperature, flow, pressure, proximity, and liquid level is common. Wireless control of machine tools, robots, and industrial processes simplifies and facilitates economy and convenience in industrial settings. M2M technology has opened the door to many new applications such as monitoring vending machines and vehicle location (GPS). The Internet of Things is mostly wireless. RFID has made it possible to more conveniently track and locate almost anything.

This great rush to make everything wireless has produced a flood of different wireless technologies and protocols. Some were established primarily for one application, while others are more general and have many uses.

Table Of Contents

- Wireless Technology Choices

- Critical Design Factors

- Typical Applications

- Checklist For Selecting A Wireless Technology

- References

Wireless Technology Choices

Many wireless technologies are available, and most of them are standardized (see the table). Some were developed for specific applications while others are flexible and generic. Most are also implemented in small, low-cost IC form or in complete drop-in modules. Selecting the technology for a given application is the challenge.

ANT+

ANT and ANT+ are proprietary wireless sensor network technologies used in the collection and transfer of sensor data. As a type of personal-area network (PAN), ANT’s primary applications include sports, wellness, and home health. For example, it’s used in heart-rate monitors, speedometers, calorimeters, blood pressure monitors, position tracking, homing devices, and thermometers. Typical radios are built into sports watches and equipment like workout machines.

The technology divides the 2.4-GHz industrial, scientific, and medical (ISM) band into 1-MHz channels. The radios have a basic data rate of 1 Mbit/s. A time division multiplexing (TDM) scheme accommodates multiple sensors. ANT+ supports star, tree, mesh, and peer-to-peer topologies. The protocol and packet format are simple, and it boasts ultra-low power consumption and long battery life.

Bluetooth

Bluetooth (www.bluetooth.org, www.bluetooth.com) is another PAN technology. The Bluetooth Special Interest Group (SIG) manages the standard. IEEE 802.15.1 also covers it. Bluetooth primarily is used in wireless headsets for cell phones. It’s also used in some laptops, printers, wireless speakers, digital cameras, wireless keyboards and mice, and video games. Bluetooth Low Energy, which has a simpler design, targets health and medical applications. It effectively competes with ANT+.

Bluetooth operates in the 2.4-GHz ISM band and uses frequency-hopping spread spectrum with Gaussian frequency shift keying (GFSK), differential quadrature phase shift keying (DQPSK), or eight-phase differential phase-shift keying (8DPSK) modulation. The basic gross data rate is 1 Mbit/s for GFSK, 2 Mbits/s for DQPSK, and 3 Mbits/s for 8DPSK. There are also three power classes of 0 dBm (1 mW), 4 dBm (2.5 mW), and 20 dBm (100 mW), which essentially determine range. Standard range is about 10 meters and up to 100 meters at maximum power with a clear path.
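The dBm and milliwatt figures quoted for the power classes are related by a simple logarithmic conversion. A minimal sketch, with hypothetical helper names:

```python
import math


def dbm_to_mw(dbm):
    """Convert a power level in dBm to milliwatts (0 dBm is 1 mW)."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw):
    """Convert a power in milliwatts to dBm."""
    return 10 * math.log10(mw)


# The three power classes mentioned in the text.
for dbm in (0, 4, 20):
    print(f"{dbm} dBm = {dbm_to_mw(dbm):.1f} mW")
```

Every 10 dB step multiplies the power by 10, and every 3 dB step roughly doubles it, which is why 4 dBm comes out at about 2.5 mW.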

Bluetooth is also capable of forming simple networks of one master and up to seven active slave devices. Called piconets, these PANs aren’t widely used. The peer-to-peer communications mode is the most common. The Bluetooth SIG defines multiple “profiles” or software applications that have been certified for interoperability among vendor chips, modules, and software.

Cellular

With services from most network carriers, cellular radio provides data transmission capability for machine-to-machine (M2M) applications. M2M is used for remote monitoring and control. Cellular radio modules are widely available to build into other equipment (Fig. 1). Most of the standard technologies are used, such as GSM/GPRS/EDGE/WCDMA/HSPA on the AT&T and T-Mobile networks and cdma2000/EV-DO on the Verizon and Sprint networks.

1. Put a cell phone in your product. The Sierra Wireless AirPrime SL808x series is a full UMTS/WCDMA/HSDPA data cell phone designed to be embedded into other products. The module measures 25 by 30 mm, and it can transfer data downloads up to 3.6 Mbits/s.

IEEE 802.15.4

IEEE 802.15.4 is designed to support peer-to-peer links as well as wireless sensor networks. The standard defines the basic physical layer (PHY), including frequency range, modulation, data rates, and frame format, and the media access control (MAC) layer. Separate protocol stacks are then designed to use the basic PHY and MAC. Several wireless standards use the 802.15.4 standard as the PHY/MAC base, including ISA100, WirelessHART, ZigBee, and 6LoWPAN.

The standard defines three basic frequency ranges. The most widely used is the worldwide 2.4-GHz ISM band (16 channels). The basic data rate is 250 kbits/s. Another range is the 902- to 928-MHz ISM band in the U.S. (10 channels). The data rate is 40 kbits/s or 250 kbits/s. Then there’s the European 868-MHz band (one channel) with a data rate of 20 kbits/s.
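The three ranges and their channel counts can be sketched as a channel-number lookup. The numbering (channel 0 at 868 MHz, 1 to 10 at 915 MHz, 11 to 26 at 2.4 GHz) and the center-frequency formulas below are taken from the early editions of the standard as generally documented. They are not stated in this article, so treat them as assumptions and check the edition you are designing against. The function name is hypothetical.

```python
def channel_center_mhz(channel):
    """Center frequency in MHz for an 802.15.4 channel number.

    Assumed channel plan (early editions of the standard):
      0        -> 868-MHz band, one channel
      1 to 10  -> 902- to 928-MHz band, 2-MHz spacing
      11 to 26 -> 2.4-GHz band, 5-MHz spacing
    """
    if channel == 0:
        return 868.3
    if 1 <= channel <= 10:
        return 906.0 + 2.0 * (channel - 1)
    if 11 <= channel <= 26:
        return 2405.0 + 5.0 * (channel - 11)
    raise ValueError(f"unknown 802.15.4 channel: {channel}")


print(channel_center_mhz(0))   # 868.3
print(channel_center_mhz(10))  # 924.0
print(channel_center_mhz(11))  # 2405.0
print(channel_center_mhz(26))  # 2480.0
```

Counting the valid channel numbers reproduces the figures in the text: one channel at 868 MHz, 10 in the 915-MHz band, and 16 at 2.4 GHz.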

All three ranges use direct sequence spread spectrum (DSSS) with either binary phase-shift keying (BPSK) or offset quadrature phase-shift keying (O-QPSK) modulation. The multiple access mode is carrier sense multiple access with collision avoidance (CSMA-CA). The minimum defined power level is –3 dBm (0.5 mW). The most common power level is 0 dBm. A 20-dBm level is defined for longer-range applications. Typical range is less than 10 meters.
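The CSMA-CA access mode mentioned above can be sketched roughly as follows: wait a random backoff, check whether the channel is clear, and widen the backoff window after each busy result. This is a simplified model of the unslotted variant, not the standard's full procedure. The constants are believed to match the MAC defaults (macMinBE, macMaxBE, macMaxCSMABackoffs) but are assumptions here, and `channel_is_clear` is a hypothetical stand-in for the radio's clear channel assessment.

```python
import random

# Assumed defaults; verify against the edition of the standard in use.
MAC_MIN_BE = 3             # initial backoff exponent
MAC_MAX_BE = 5             # largest backoff exponent
MAC_MAX_CSMA_BACKOFFS = 4  # busy results tolerated before giving up


def csma_ca_attempt(channel_is_clear, rng=random):
    """Try to win the channel; return (success, backoff_periods_waited).

    channel_is_clear: callable returning True when the channel is idle.
    rng: object with a randint(a, b) method (the random module by default).
    """
    backoffs = 0
    exponent = MAC_MIN_BE
    waited = 0
    while True:
        # Wait a random number of unit backoff periods in [0, 2^BE - 1].
        waited += rng.randint(0, 2 ** exponent - 1)
        if channel_is_clear():
            return True, waited
        # Channel busy: widen the window and retry, up to the limit.
        backoffs += 1
        exponent = min(exponent + 1, MAC_MAX_BE)
        if backoffs > MAC_MAX_CSMA_BACKOFFS:
            return False, waited


if __name__ == "__main__":
    ok, waited = csma_ca_attempt(lambda: random.random() > 0.5)
    print("transmit" if ok else "channel access failure", waited)
```

With these constants a node performs at most five channel checks before reporting a channel access failure to the upper layer.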

IEEE 802.22

Also known as the Wireless Regional Area Network (WRAN) standard, IEEE 802.22 is one of the IEEE’s newest wireless standards. It’s designed to be used in the license-free unused broadcast TV channels called white space. These 6-MHz channels occupy the frequency range from 470 MHz to 698 MHz. Their availability varies from location to location. The standard isn’t widely used yet, though. White space radios use proprietary protocols and wireless standards.

Because of the potential for interference to TV stations, 802.22 radios must meet strict requirements and use cognitive radio techniques to find an unused channel. The radios use frequency-agile circuitry to scan for unused channels and to listen for potential interfering signals. They also consult a TV white space database to determine which channel will give the best results without interfering with other communications.

This standard is designed for fixed wireless broadband connections. The basestations talk to multiple fixed-location consumer radios for Internet access or other services. They would compete with cable TV and phone companies and/or provide broadband connectivity in rural areas underserved by other companies. While mobile operation is possible, most radios will be fixed.

The standard uses orthogonal frequency-division multiplexing (OFDM) to provide spectral efficiency sufficient to supply multiple user channels with a minimum of 1.5-Mbit/s download speed and 384-kbit/s upload speed. The maximum possible data rate per 6-MHz channel ranges from 18 to 22 Mbits/s. The great advantage of 802.22 is its use of the VHF and low UHF frequencies, which offer very long-range connections. With the maximum allowed 4 W of effective isotropic radiated power (EIRP), a basestation range of 100 km (about 62 miles) is possible.

ISA100a

Developed by the International Society of Automation, ISA100a (formally ISA100.11a) is designed for industrial process control and factory automation. It uses the 802.15.4 PHY and MAC but adds special features for security, reliability, feedback control, and other industrial requirements.

Infrared

Infrared (IR) wireless technology uses light instead of radio for its connectivity. Infrared is invisible light, lower in frequency than visible light, that can serve as a carrier of high-speed digital data. The primary wavelength range is 850 to 940 nm. The transmitter is an IR LED, and the receiver is a diode photodetector and amplifier. The light wave is usually modulated with a high-frequency signal that is, in turn, coded and modulated by the digital data to be transmitted.
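To put the IR carrier in perspective against the radio bands discussed elsewhere, a wavelength can be converted to a frequency with f = c/λ. The sketch below treats the 850 to 940 range as nanometres, the near-infrared region where IR LEDs typically emit. The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second, in vacuum


def wavelength_nm_to_thz(wavelength_nm):
    """Convert a free-space wavelength in nanometres to terahertz."""
    frequency_hz = SPEED_OF_LIGHT_M_S / (wavelength_nm * 1e-9)
    return frequency_hz / 1e12


for nm in (850, 940):
    print(f"{nm} nm = {wavelength_nm_to_thz(nm):.1f} THz")
```

Both wavelengths land in the hundreds of terahertz, about five orders of magnitude above the 2.4-GHz ISM band.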

Most TV sets and consumer electronic devices use an IR remote control, which has a range of several meters and a narrow angle (<30°) of transmission. Various protocols and coding schemes are used. Also, IR devices must have a clear line-of-sight path for a connection.

There is a separate standard for data transmission called IrDA. The Infrared Data Association sets and maintains its specifications. IrDA exists in many versions mainly delineated by their data rate. Data rates start at 9.6 to 115.2 kbits/s and step up through 4 Mbits/s, 16 Mbits/s, 96 Mbits/s, and 512 Mbits/s to 1 Gbit/s. New standards for rates of 5 and 10 Gbits/s are in development. The range is less than a meter.

IR has several key benefits. First, since it’s light instead of a radio wave, it isn’t susceptible to radio interference of any kind. Second, it’s highly secure since its signals are difficult to intercept or spoof.

IR once was widely used in laptops, PDAs, some cameras, and printers. It has mainly been replaced by other wireless technologies like Bluetooth and Wi-Fi. It is still widely used in consumer remote controls, but new RF remote controls are gradually replacing the IR remotes in some consumer equipment. Some designs include both IR and RF.

ISM Band

Most of these standards use the unlicensed ISM bands set aside by the Federal Communications Commission (FCC) in Part 15 of Title 47 of the Code of Federal Regulations (CFR). The most widely used ISM band is the 2.4- to 2.4835-GHz band, which is used by cordless phones, Wi-Fi, Bluetooth, 802.15.4 radios, and many other devices. The second most widely used band is the 902- to 928-MHz band, with 915 MHz being a sweet spot.

Other popular ISM frequencies are 315 MHz for garage door openers and remote keyless entry (RKE) applications and 433 MHz for remote temperature monitoring. Other less used frequencies are 13.56 MHz, 27 MHz, and 72 MHz. For full consideration of all available bands, see Part 15, which is a must-have document for anyone designing and building short-range wireless products. It’s available through the U.S. Government Printing Office.

For many simple wireless applications that do not require complex network connections, security, or other custom features, simple proprietary protocols can be designed. Many vendors of ISM band transceivers offer standard protocol support and development systems that can be used to develop a protocol for a specific application.

Near-Field Communications

Near-field communications (NFC) is an ultra-short-range technology that was designed for secure payment transactions and similar applications. Its maximum range is about 20 cm, with 4 to 5 cm being a typical link distance. This short distance greatly enhances the security of the connection, which is also usually encrypted. Many smart phones include NFC, and many others are expected to get it eventually. The goal is to implement NFC payment systems where consumers can tap a payment terminal with their cell phone instead of using a credit card.

NFC uses the 13.56-MHz ISM frequency. At this low frequency, the transmit and receive loop antennas function mainly as the primary and secondary windings of a transformer, respectively. The transmission is by the magnetic field of the signal rather than the accompanying electric field, which is less dominant in the near field.
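A rough calculation shows why NFC links sit so deep in the near field. The sketch below uses the common rule of thumb that the reactive near field extends to about λ/2π from the antenna. That rule is an assumption brought in for illustration, not something stated in the article, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second


def wavelength_m(frequency_hz):
    """Free-space wavelength in metres."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def near_field_boundary_m(frequency_hz):
    """Approximate reactive near-field radius (lambda / 2*pi rule of thumb)."""
    return wavelength_m(frequency_hz) / (2 * math.pi)


nfc_hz = 13.56e6
print(f"wavelength: {wavelength_m(nfc_hz):.2f} m")
print(f"near-field boundary: {near_field_boundary_m(nfc_hz):.2f} m")
```

At 13.56 MHz the wavelength is roughly 22 m and the boundary is several metres out, so a link of a few centimetres is dominated by magnetic coupling between the two loops.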

NFC is also used to read tags that are powered by the interrogating NFC signal itself. The unpowered tags convert the RF signal into dc that powers a processor and memory that can provide information related to the application. Numerous NFC transceiver chips are available to implement new applications, and multiple standards exist:

- ISO/IEC 14443A

- ISO/IEC 14443B

- JIS X6319-4

- ECMA 340, designated NFCIP-1

- ISO/IEC 18092

- ECMA 352, called NFCIP-2, and ISO/IEC 23917

RFID tags are small, flat, and cheap and can be attached to anything that must be tracked or identified. They have replaced bar codes in some applications. RFID uses the 13.56-MHz ISM frequency, but other frequencies are also used including 125 kHz, 134.5 kHz, and frequencies in the 902- to 928-MHz range. Multiple ISO/IEC standards exist.

6LoWPAN

6LoWPAN means IPv6 over low-power wireless PANs. Developed by the Internet Engineering Task Force (IETF), it provides a way to transmit IPv6 packets over low-power wireless point-to-point (P2P) links and mesh networks. This standard (RFC 4944) also permits the implementation of the Internet of Things on even the smallest and most remote devices.

The protocol provides encapsulation and header compression routines for use with 802.15.4 radios. The IETF is said to be working on a version of this protocol for Bluetooth. If your wireless device must have an Internet connection, this is your technology of choice.
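Some quick arithmetic shows why header compression matters on 802.15.4 radios. The octet counts below follow the frame budget usually quoted from RFC 4944 (127-octet maximum PHY packet, up to 25 octets of MAC overhead, up to 21 octets of link-layer security, a 40-octet IPv6 header, and an 8-octet UDP header). They are not given in this article, so treat them as assumptions. The 2-octet compressed header in the second call is purely illustrative.

```python
# Assumed worst-case octet counts; not taken from this article.
PHY_MAX_PACKET = 127     # largest 802.15.4 PHY packet
MAC_MAX_OVERHEAD = 25    # worst-case MAC header and trailer
LINK_SECURITY_MAX = 21   # worst-case link-layer security overhead
IPV6_HEADER = 40         # uncompressed IPv6 header
UDP_HEADER = 8           # uncompressed UDP header


def app_payload_octets(ipv6_header=IPV6_HEADER,
                       udp_header=UDP_HEADER,
                       security=LINK_SECURITY_MAX):
    """Octets left for application data in a single 802.15.4 frame."""
    return (PHY_MAX_PACKET - MAC_MAX_OVERHEAD - security
            - ipv6_header - udp_header)


print(app_payload_octets())               # 33
print(app_payload_octets(ipv6_header=2))  # 71 (illustrative compressed size)
```

With uncompressed headers only about a quarter of each frame carries application data, which is the gap the encapsulation and compression routines are meant to close.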

Ultra Wideband

Ultra Wideband (UWB) uses the 3.1- to 10.6-GHz range to provide high-speed data connectivity for PCs, laptops, set-top boxes, and other devices. The band is divided up into multiple 528-MHz wide channels. OFDM is used to provide data rates from 53 Mbits/s to 480 Mbits/s. The WiMedia Alliance originally defined the standard.
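The number of 528-MHz channels that fit in the 3.1- to 10.6-GHz range follows from simple division. A minimal sketch, assuming the channels are packed edge to edge from the bottom of the range (the helper name is hypothetical):

```python
def whole_channels(start_mhz, end_mhz, width_mhz):
    """Return (channel_count, leftover_mhz) for channels packed edge to edge."""
    span = end_mhz - start_mhz
    count = span // width_mhz
    return count, span - count * width_mhz


count, leftover = whole_channels(3100, 10600, 528)
print(count, leftover)  # 14 108
```

Fourteen channels fit, with about 108 MHz of the range left over.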

Devices use ultra-low power to prevent interference with services in the assigned band. This restricts range to a maximum of about 10 meters. In most applications, the range is less than a few meters so the highest data rates can be used. UWB is used primarily in video applications such as TV sets, cameras, laptops, and video monitors in docking stations.

Wi-Fi

Wi-Fi is the commercial name of the wireless technology defined by the IEEE 802.11 standards. Next to Bluetooth, Wi-Fi is by far the most widespread wireless technology. It is in smart phones, laptops, tablets, and ultrabooks. It’s also used in TV sets, video accessories, and home wireless routers. It’s deployed in many industrial applications as well. Wi-Fi is now showing up in cellular networks where carriers are using it to offload some data traffic like video that clogs the network.

Wi-Fi has been around since the late 1990s when a version called 802.11b became popular. It offered up to 11-Mbit/s data rates in the 2.4-GHz ISM band. Since then, new standards have been developed including 802.11a (5-GHz band), 802.11g, and 802.11n, which use OFDM to reach speeds of up to 54 Mbits/s (802.11a and 802.11g) and 300 Mbits/s (802.11n) under the most favorable conditions.